I. Environment Preparation

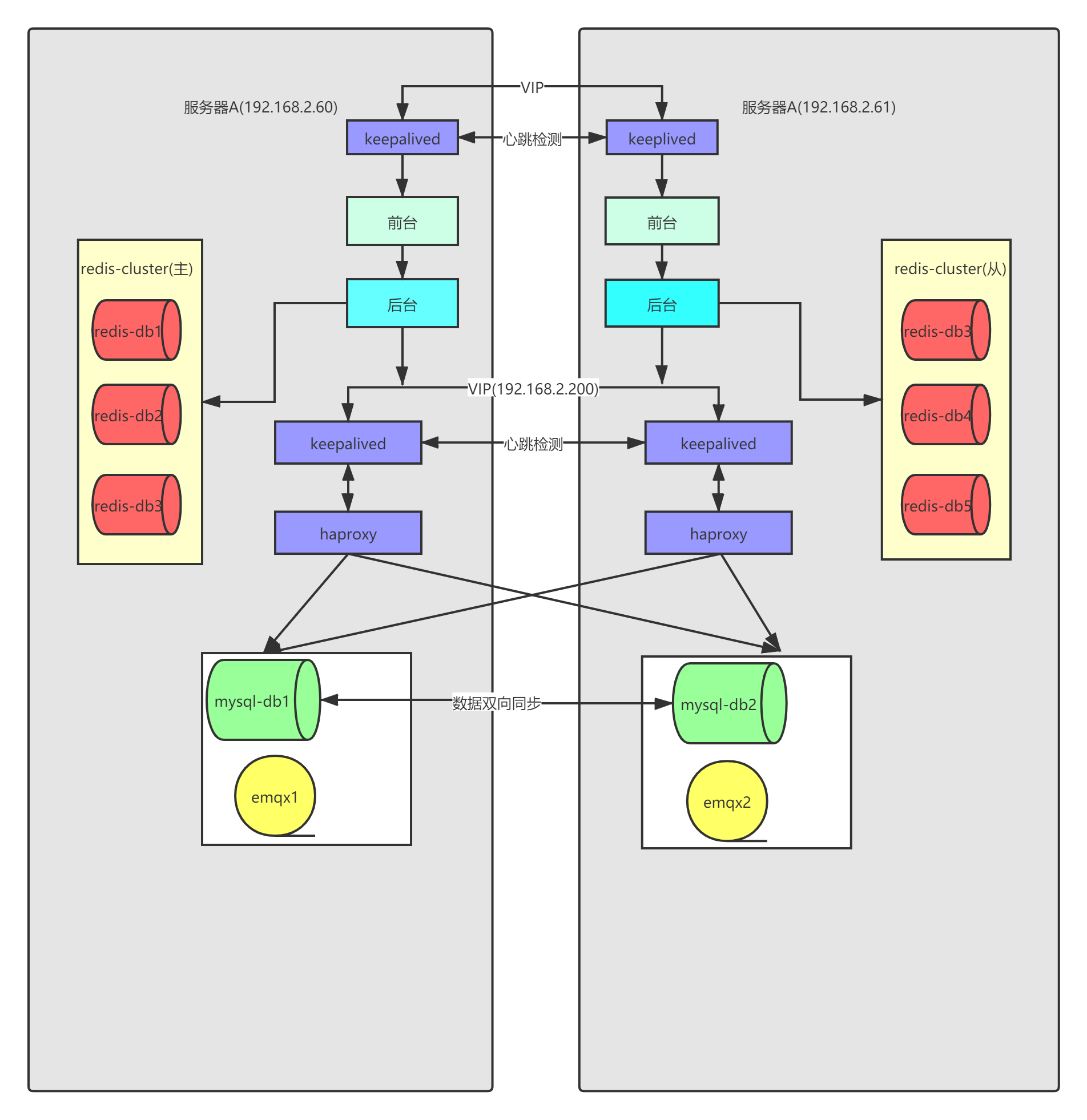

1. Two servers

192.168.2.60 master

192.168.2.61 slave

192.168.2.200 VIP

2. Installation packages

Percona-XtraDB-Cluster-57-5.7.32-31.47.1.el7.x86_64.rpm

redis-6.2.0.tar.gz

emqx-centos7-4.2.5-aarch64.rpm

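3. Open the firewall ports

Ports (6309, 3306, 4444, 4567, 4568, 6379, 1883, 8883, 8083, 8084, 8081, 18083, 4369, 4370, 5369, 6369, 11083, 18081, 6883, 7000-7005)

    firewall-cmd --zone=public --add-port=3306/tcp --permanent
    firewall-cmd --zone=public --add-port=6379/tcp --permanent

Repeat the command for every port in the list, then run firewall-cmd --reload so that the --permanent rules take effect.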
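Only two of the ports are spelled out above. The following is a minimal sketch, assuming firewalld is in use and that every port in the list is TCP, which prints one command per port so the result can be reviewed before anything is changed. The function name print_firewall_cmds is illustrative and not part of the original procedure.

```shell
#!/bin/sh
# Ports from the list above; 7000-7005 is written as a firewalld port range.
# Assumption: all of them are TCP, as in the two commands shown in the text.
PORTS="6309 3306 4444 4567 4568 6379 1883 8883 8083 8084 8081 18083 4369 4370 5369 6369 11083 18081 6883 7000-7005"

# Print (do not run) one firewall-cmd line per port, followed by the reload
# that activates rules added with --permanent.
print_firewall_cmds() {
    for port in $PORTS; do
        echo "firewall-cmd --zone=public --add-port=${port}/tcp --permanent"
    done
    echo "firewall-cmd --reload"
}

print_firewall_cmds
```

To apply the rules, the printed lines can be piped to a root shell on each server once they look right.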

II. Create the MySQL PXC cluster

1. Disable SELinux

    vi /etc/selinux/config

Set the SELINUX property to disabled, then reboot:

    reboot

2. Install PXC on both servers

    [root@localhost pxc]# yum install -y Percona-XtraDB-Cluster-57-5.7.32-31.47.1.el7.x86_64.rpm

3. Modify the configuration

Edit /etc/my.cnf on each PXC node (adjust the contents for each node, for example server-id, wsrep_node_name and wsrep_node_address)

    [mysqld]
    server-id=1 # must be unique across all nodes
    datadir=/var/lib/mysql
    socket=/var/lib/mysql/mysql.sock
    log-error=/var/log/mysqld.log
    pid-file=/var/run/mysqld/mysqld.pid
    log-bin
    log_slave_updates
    expire_logs_days=7

    character_set_server=utf8
    bind-address=0.0.0.0
    # skip DNS resolution
    skip-name-resolve

    # Disabling symbolic-links is recommended to prevent assorted security risks
    symbolic-links=0
    # Add the settings above before initializing the password. Once the accounts and passwords are set up, stop mysql, add the settings below, and start mysql again.
    wsrep_provider=/usr/lib64/galera3/libgalera_smm.so
    wsrep_cluster_name=pxc-cluster
    wsrep_cluster_address=gcomm://192.168.2.60,192.168.2.61
    wsrep_node_name=pxc1
    wsrep_node_address=192.168.2.60
    wsrep_sst_method=xtrabackup-v2
    wsrep_sst_auth=admin:Abc_123456 # MySQL account:password
    pxc_strict_mode=ENFORCING
    binlog_format=ROW
    default_storage_engine=InnoDB
    innodb_autoinc_lock_mode=2

4. Management commands for the primary node

(the first PXC node to be started)

    systemctl start mysql@bootstrap.service
    systemctl stop mysql@bootstrap.service
    systemctl restart mysql@bootstrap.service

5. Management commands for the other nodes

(every PXC node that is not started first)

    systemctl start mysql

6. Check the cluster status

    mysql> show status like 'wsrep_cluster%';
    +--------------------------+--------------------------------------+
    | Variable_name            | Value                                |
    +--------------------------+--------------------------------------+
    | wsrep_cluster_weight     | 2                                    |
    | wsrep_cluster_conf_id    | 2                                    |
    | wsrep_cluster_size       | 2                                    |
    | wsrep_cluster_state_uuid | 89b42550-75bb-11eb-9b2c-6fca85919899 |
    | wsrep_cluster_status     | Primary                              |
    +--------------------------+--------------------------------------+
    5 rows in set (0.00 sec)
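The two values that matter in this output are wsrep_cluster_size (2 for this setup) and wsrep_cluster_status (Primary). As a rough sketch, the check can be scripted by feeding the status rows to a small filter. The helper name wsrep_healthy is made up for this example, and it assumes tab-separated rows such as those produced by the mysql client's -N -B options.

```shell
#!/bin/sh
# Read "name<TAB>value" rows on stdin and succeed only if the cluster has the
# expected number of nodes and reports the Primary state.
# Usage: ... | wsrep_healthy <expected-size>
wsrep_healthy() {
    expected_size=$1
    awk -v want="$expected_size" '
        $1 == "wsrep_cluster_size"   { size = $2 }
        $1 == "wsrep_cluster_status" { status = $2 }
        END { exit !(size == want && status == "Primary") }
    '
}

# Example on a node (prompts for the root password):
#   mysql -uroot -p -N -B -e "SHOW STATUS LIKE 'wsrep_cluster%'" | wsrep_healthy 2 && echo OK
```

The filter only inspects the two rows named above; any other rows in the input are ignored.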

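Note: if the PXC node that was shut down last did not exit cleanly, first edit /var/lib/mysql/grastate.dat and set safe_to_bootstrap to 1, then start that node using the primary-node commands from step 4.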
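The edit described in the note can be sketched as a small helper. This is an illustration under assumptions rather than part of the original procedure: the function name mark_safe_to_bootstrap is invented here, and it should only be used on the node that holds the most recent data, with mysql stopped.

```shell
#!/bin/sh
# Set safe_to_bootstrap to 1 in the grastate.dat file given as $1.
# A .bak copy of the original file is kept next to it.
mark_safe_to_bootstrap() {
    file=$1
    cp "$file" "$file.bak" || return 1
    # Rewrite only the safe_to_bootstrap line; all other lines pass through unchanged.
    sed 's/^safe_to_bootstrap:.*/safe_to_bootstrap: 1/' "$file.bak" > "$file"
}

# On the node that was shut down last:
#   mark_safe_to_bootstrap /var/lib/mysql/grastate.dat
#   systemctl start mysql@bootstrap.service
```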

7. Initialize the MySQL database

7.1 Find the initial MySQL password

    grep "A temporary password" /var/log/mysqld.log
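If only the password itself is needed, for example to paste it into the next step, it can be cut out of the matching log line. The sketch below assumes the usual MySQL 5.7 message layout, in which the password is the last whitespace-separated field of the line. The function name temp_password is illustrative.

```shell
#!/bin/sh
# Print the most recent temporary root password found in the log file given as $1.
# Assumption: the password is the last field of the "A temporary password" line.
temp_password() {
    awk '/A temporary password/ { pw = $NF } END { if (pw != "") print pw }' "$1"
}

# Example:
#   temp_password /var/log/mysqld.log
```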

7.2 Change the MySQL password

    mysql_secure_installation

7.3 Connect to MySQL and create a remote administrator account

    CREATE USER 'admin'@'%' IDENTIFIED BY 'Abc_123456';
    GRANT all privileges ON *.* TO 'admin'@'%';
    FLUSH PRIVILEGES;

III. Create the Redis cluster

| Node | Port | Master/replica |
| --- | --- | --- |
| 192.168.2.60 | 7000 | |
| 192.168.2.60 | 7001 | |
| 192.168.2.60 | 7002 | |
| 192.168.2.61 | 7003 | |
| 192.168.2.61 | 7004 | |
| 192.168.2.61 | 7005 | |

1. Install gcc

    [root@localhost local]# yum install -y gcc

2. Install Redis

    tar -zxvf redis-6.2.0.tar.gz    # unpack
    cd redis-6.2.0
    make    # compile
    make install PREFIX=/usr/local/redis-cluster    # install Redis
    cd /usr/local/redis-cluster/bin
    cp * /usr/local/redis-cluster
    mkdir -p /usr/local/redis-cluster/conf    # make sure the target directory exists
    cp /opt/redis/redis-6.2.0/redis.conf /usr/local/redis-cluster/conf/    # copy the Redis config file into the install directory

2.1 Create the following directories and files

master

    [root@localhost redis-cluster]# tree -L 2
    .
    ├── conf
    │   ├── redis-7000.conf
    │   ├── redis-7001.conf
    │   └── redis-7002.conf
    ├── data
    │   ├── redis-7000
    │   ├── redis-7001
    │   └── redis-7002
    ├── log
    │   ├── redis-7000.log
    │   ├── redis-7001.log
    │   └── redis-7002.log

slave

    [root@localhost redis-cluster]# tree -L 2
    .
    ├── conf
    │   ├── redis-7003.conf
    │   ├── redis-7004.conf
    │   └── redis-7005.conf
    ├── data
    │   ├── redis-7003
    │   ├── redis-7004
    │   └── redis-7005
    ├── log
    │   ├── redis-7003.log
    │   ├── redis-7004.log
    │   └── redis-7005.log

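3. Modify the Redis configuration

redis-700*.conf

    # bind 127.0.0.1

    # run Redis in the background
    daemonize yes

    # data directory
    dir /usr/local/redis-cluster/data/redis-7000

    # log file
    logfile /usr/local/redis-cluster/log/redis-7000.log

    # port
    port 7000

    # enable cluster mode
    cluster-enabled yes

    # cluster state file, generated automatically on first start
    # only the file name is needed here; once the cluster starts it is created under the data directory
    cluster-config-file "nodes-7000.conf"

    # node timeout, set to 10 seconds
    cluster-node-timeout 10000

Replace 7000 with the node's own port in each file. Note that with bind commented out and no password set, the default protected-mode yes makes Redis refuse connections from other hosts, so either set protected-mode no or configure requirepass and masterauth.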
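Since the six files differ only in the port number, they can be generated rather than edited by hand. The following is a sketch under assumptions, not the author's method: it writes a minimal file containing just the settings shown above instead of editing a copy of the full redis.conf, and the function name gen_redis_conf is made up for this example.

```shell
#!/bin/sh
# Write a minimal cluster config for one port under the given base directory
# and create the data and log directories it refers to.
# Usage: gen_redis_conf <base-dir> <port>
gen_redis_conf() {
    base=$1
    port=$2
    mkdir -p "$base/conf" "$base/data/redis-$port" "$base/log" || return 1
    cat > "$base/conf/redis-$port.conf" <<EOF
daemonize yes
dir $base/data/redis-$port
logfile $base/log/redis-$port.log
port $port
cluster-enabled yes
cluster-config-file "nodes-$port.conf"
cluster-node-timeout 10000
EOF
}

# On 192.168.2.60 (use 7003 7004 7005 on 192.168.2.61):
#   for p in 7000 7001 7002; do gen_redis_conf /usr/local/redis-cluster "$p"; done
```

Every setting not listed keeps the Redis default, so any further changes made to the copied redis.conf would have to be added to the template.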

4. Start Redis

From /usr/local/redis-cluster, run the first three commands on 192.168.2.60 and the last three on 192.168.2.61:

    sudo ./redis-server conf/redis-7000.conf
    sudo ./redis-server conf/redis-7001.conf
    sudo ./redis-server conf/redis-7002.conf
    sudo ./redis-server conf/redis-7003.conf
    sudo ./redis-server conf/redis-7004.conf
    sudo ./redis-server conf/redis-7005.conf

5. Create the cluster

    [root@localhost redis-cluster]# ./redis-cli --cluster create 192.168.2.60:7000 192.168.2.60:7001 192.168.2.60:7002 192.168.2.61:7003 192.168.2.61:7004 192.168.2.61:7005 --cluster-replicas 1
    >>> Performing hash slots allocation on 6 nodes...
    Master[0] -> Slots 0 - 5460
    Master[1] -> Slots 5461 - 10922
    Master[2] -> Slots 10923 - 16383
    Adding replica 192.168.2.61:7005 to 192.168.2.60:7000
    Adding replica 192.168.2.60:7002 to 192.168.2.61:7003
    Adding replica 192.168.2.61:7004 to 192.168.2.60:7001
    M: 878a4ba824f79b83f63a72a427667bf2369e5d8c 192.168.2.60:7000
       slots:[0-5460] (5461 slots) master
    M: 0009436563cd78540c39037048a512b073f591ef 192.168.2.60:7001
       slots:[10923-16383] (5461 slots) master
    S: c1475a3103ebf50680e40e4cd853e032a7911dd2 192.168.2.60:7002
       replicates 1639037fd13489e9d9abd5dcb054535857685c94
    M: 1639037fd13489e9d9abd5dcb054535857685c94 192.168.2.61:7003
       slots:[5461-10922] (5462 slots) master
    S: 67ccd67c037bcd4fde2e34ab045768637b1dc1b4 192.168.2.61:7004
       replicates 0009436563cd78540c39037048a512b073f591ef
    S: 85ad89a175605cf3fe81164b340d5a788a4b7512 192.168.2.61:7005
       replicates 878a4ba824f79b83f63a72a427667bf2369e5d8c
    Can I set the above configuration? (type 'yes' to accept): yes
    >>> Nodes configuration updated
    >>> Assign a different config epoch to each node
    >>> Sending CLUSTER MEET messages to join the cluster
    Waiting for the cluster to join
    .
    >>> Performing Cluster Check (using node 192.168.2.60:7000)
    M: 878a4ba824f79b83f63a72a427667bf2369e5d8c 192.168.2.60:7000
       slots:[0-5460] (5461 slots) master
       1 additional replica(s)
    S: 67ccd67c037bcd4fde2e34ab045768637b1dc1b4 192.168.2.61:7004
       slots: (0 slots) slave
       replicates 0009436563cd78540c39037048a512b073f591ef
    S: c1475a3103ebf50680e40e4cd853e032a7911dd2 192.168.2.60:7002
       slots: (0 slots) slave
       replicates 1639037fd13489e9d9abd5dcb054535857685c94
    M: 0009436563cd78540c39037048a512b073f591ef 192.168.2.60:7001
       slots:[10923-16383] (5461 slots) master
       1 additional replica(s)
    S: 85ad89a175605cf3fe81164b340d5a788a4b7512 192.168.2.61:7005
       slots: (0 slots) slave
       replicates 878a4ba824f79b83f63a72a427667bf2369e5d8c
    M: 1639037fd13489e9d9abd5dcb054535857685c94 192.168.2.61:7003
       slots:[5461-10922] (5462 slots) master
       1 additional replica(s)
    [OK] All nodes agree about slots configuration.
    >>> Check for open slots...
    >>> Check slots coverage...
    [OK] All 16384 slots covered.

6. List the master nodes in the cluster

    [root@localhost redis-cluster]# ./redis-cli -c -p 7000 cluster nodes| grep master
    c1475a3103ebf50680e40e4cd853e032a7911dd2 192.168.2.60:7002@17002 master - 0 1614582010000 7 connected 5461-10922
    0009436563cd78540c39037048a512b073f591ef 192.168.2.60:7001@17001 master - 0 1614582009000 2 connected 10923-16383
    878a4ba824f79b83f63a72a427667bf2369e5d8c 192.168.2.60:7000@17000 myself,master - 0 1614582008000 1 connected 0-5460
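In the output above all three masters sit on 192.168.2.60, which is worth noticing on a two-server setup because losing that host takes every master with it. A small filter, sketched here with the made-up name masters_per_host, summarizes how masters are spread across hosts. It assumes the standard cluster nodes layout, where field 2 is ip:port@busport and field 3 is the flag list.

```shell
#!/bin/sh
# Read `redis-cli cluster nodes` output on stdin and print "<count> <host>"
# for every host that holds at least one master not flagged as failed.
masters_per_host() {
    awk '$3 ~ /master/ && $3 !~ /fail/ { split($2, addr, ":"); n[addr[1]]++ }
         END { for (h in n) print n[h], h }' | sort -k2
}

# Example:
#   ./redis-cli -c -p 7000 cluster nodes | masters_per_host
```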

IV. Create the EMQX cluster

1. Install EMQX

    curl https://repos.emqx.io/install_emqx.sh | bash

2. Start EMQX in the background

    [root@localhost emqx]# emqx start
    EMQ X Broker 4.2.7 is started successfully!

3. Check the EMQX status

    [root@localhost emqx]# emqx_ctl status
    Node 'emqx@127.0.0.1' is started
    emqx 4.2.7 is running

4. Configure the EMQX nodes

    vi /etc/emqx/emqx.conf

master node

    node.name = emqx@192.168.2.60

slave node

    node.name = emqx@192.168.2.61
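The same change can be made without opening an editor. This is a minimal sketch, assuming node.name appears on a single uncommented line of emqx.conf as in the default file. The helper name set_emqx_node_name is hypothetical.

```shell
#!/bin/sh
# Rewrite the node.name line of an emqx.conf so the node is named emqx@<ip>.
# A .bak copy of the original file is kept next to it.
# Usage: set_emqx_node_name <conf-file> <ip>
set_emqx_node_name() {
    conf=$1
    ip=$2
    cp "$conf" "$conf.bak" || return 1
    sed "s/^node\.name[[:space:]]*=.*/node.name = emqx@$ip/" "$conf.bak" > "$conf"
}

# On the master:  set_emqx_node_name /etc/emqx/emqx.conf 192.168.2.60
# On the slave:   set_emqx_node_name /etc/emqx/emqx.conf 192.168.2.61
```

EMQX needs to be restarted afterwards so that the node comes up under its new name.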

5. Join the node to the cluster

After EMQX has been started on both nodes, run the following on the slave node:

    [root@localhost emqx]# emqx_ctl cluster join emqx@192.168.2.60
    =CRITICAL REPORT==== 25-Feb-2021::10:36:14.383494 ===
    [EMQ X] emqx shutdown for join
    Join the cluster successfully.
    Cluster status: #{running_nodes => ['emqx@192.168.2.60','emqx@192.168.2.61'],
                      stopped_nodes => []}

6. Check the cluster status

    [root@localhost emqx]# emqx_ctl cluster status
    Cluster status: #{running_nodes => ['emqx@192.168.2.60','emqx@192.168.2.61'],
                      stopped_nodes => []}

V. Dual-node hot standby

1. Install HAProxy

    yum install -y haproxy

2. Edit the configuration file

    vi /etc/haproxy/haproxy.cfg

    global
        log 127.0.0.1 local2
        chroot /var/lib/haproxy
        pidfile /var/run/haproxy.pid
        maxconn 4000
        user haproxy
        group haproxy
        daemon
        # turn on stats unix socket
        stats socket /var/lib/haproxy/stats

    defaults
        mode http
        log global
        option httplog
        option dontlognull
        option http-server-close
        option forwardfor except 127.0.0.0/8
        option redispatch
        retries 3
        timeout http-request 10s
        timeout queue 1m
        timeout connect 10s
        timeout client 1m
        timeout server 1m
        timeout http-keep-alive 10s
        timeout check 10s
        maxconn 3000

    #---------------------------------------------------------------------
    # main frontend which proxys to the backends
    #---------------------------------------------------------------------
    frontend mysql
        mode tcp
        bind *:6309
        default_backend back_mysql

    backend back_mysql
        mode tcp
        balance roundrobin
        server db1 192.168.2.60:3306 check
        server db2 192.168.2.61:3306 check

    #frontend redis
    #mode tcp
    #bind *:6310
    #default_backend back_redis

    #backend back_redis
    #mode tcp
    #balance roundrobin
    #server rdb1 192.168.2.60:6379 check
    #server rdb2 192.168.2.61:6379 check

    frontend emqx_tcp
        mode tcp
        bind *:6883
        default_backend back_emqx_tcp

    backend back_emqx_tcp
        mode tcp
        balance roundrobin
        server emqx1 192.168.2.60:1883 check
        server emqx2 192.168.2.61:1883 check

    frontend emqx_api
        mode tcp
        bind *:18081
        default_backend back_emqx_api

    backend back_emqx_api
        mode tcp
        balance roundrobin
        server emqx1 192.168.2.60:8081 check
        server emqx2 192.168.2.61:8081 check

    frontend emqx_dashboard
        mode tcp
        bind *:11083
        default_backend back_emqx_dashboard

    backend back_emqx_dashboard
        mode tcp
        balance roundrobin
        server emqx1 192.168.2.60:18083 check
        server emqx2 192.168.2.61:18083 check

    listen admin_stats
        bind 0.0.0.0:4000
        mode http
        stats uri /dbs
        stats realm Global\ statistics
        stats auth admin:Abc_123456
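Each proxied service in this file follows the same frontend/backend pattern: a TCP frontend bound to one port and a round-robin backend listing both servers. The sketch below prints such a pair for a given service so that further services can be added consistently. The function name tcp_proxy and the generated server names (service name plus a counter) are choices made for this illustration and do not come from the original file.

```shell
#!/bin/sh
# Print a TCP frontend/backend pair in the same shape as the stanzas above.
# Usage: tcp_proxy <name> <listen-port> <server-port> <host>...
tcp_proxy() {
    name=$1
    listen=$2
    target=$3
    shift 3
    printf 'frontend %s\n    mode tcp\n    bind *:%s\n    default_backend back_%s\n\n' "$name" "$listen" "$name"
    printf 'backend back_%s\n    mode tcp\n    balance roundrobin\n' "$name"
    i=1
    for host in "$@"; do
        printf '    server %s%d %s:%s check\n' "$name" "$i" "$host" "$target"
        i=$((i + 1))
    done
}

# Reproduces the shape of the mysql stanza (server names differ from db1/db2).
tcp_proxy mysql 6309 3306 192.168.2.60 192.168.2.61
```

After editing haproxy.cfg, running haproxy -c -f /etc/haproxy/haproxy.cfg checks the file for syntax errors before the service is started.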

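3. Start HAProxy

    service haproxy start

4. Open the HAProxy monitoring page

Browse to http://192.168.2.60:4000/dbs (the bind port and stats uri of the admin_stats section) and log in with the stats auth credentials. Port 4000 is not in the port list of section I, so open it in the firewall as well if the page is accessed from another host.

Install HAProxy on the other server by following the same steps.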

5. Allow the VRRP protocol through the firewall

    # allow VRRP
    firewall-cmd --direct --permanent --add-rule ipv4 filter INPUT 0 --protocol vrrp -j ACCEPT
    # apply the settings
    firewall-cmd --reload

6. Install Keepalived

    yum install -y keepalived

7. Edit the configuration file

    vim /etc/keepalived/keepalived.conf

Master configuration

    ! Configuration File for keepalived

    global_defs {
        notification_email {
            acassen@firewall.loc
            failover@firewall.loc
            sysadmin@firewall.loc
        }
        notification_email_from Alexandre.Cassen@firewall.loc
        smtp_server 192.168.200.1
        smtp_connect_timeout 30
        router_id LVS_DEVEL
        vrrp_skip_check_adv_addr
        #vrrp_strict
        vrrp_garp_interval 0
        vrrp_gna_interval 0
    }

    vrrp_script check_haproxy {
        script "/etc/keepalived/check_haproxy.sh"
        interval 3
        weight -5
    }

    vrrp_instance VI_1 {
        state MASTER
        interface enp0s3
        virtual_router_id 51
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 123456
        }
        virtual_ipaddress {
            192.168.2.200 dev enp0s3
        }
        track_script {
            check_haproxy
        }
    }

Slave configuration

    ! Configuration File for keepalived

    global_defs {
        notification_email {
            acassen@firewall.loc
            failover@firewall.loc
            sysadmin@firewall.loc
        }
        notification_email_from Alexandre.Cassen@firewall.loc
        smtp_server 192.168.200.1
        smtp_connect_timeout 30
        router_id LVS_DEVEL
        vrrp_skip_check_adv_addr
        #vrrp_strict
        vrrp_garp_interval 0
        vrrp_gna_interval 0
    }

    vrrp_script check_haproxy {
        script "/etc/keepalived/check_haproxy.sh"
        interval 3
        weight -5
    }

    vrrp_instance VI_1 {
        # BACKUP on the standby node
        state BACKUP
        interface enp0s3
        # must be the same as on the master so both nodes belong to one VRRP group
        virtual_router_id 51
        # lower than the master's priority of 100
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 123456
        }
        virtual_ipaddress {
            192.168.2.200 dev enp0s3
        }
        track_script {
            check_haproxy
        }
    }

HAProxy health-check script

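Save the script as /etc/keepalived/check_haproxy.sh (the path referenced by vrrp_script) and make it executable with chmod +x.

    #!/bin/bash
    # Stop keepalived when no haproxy process is running, so the VIP moves to the other node.
    if [ "$(ps -C haproxy --no-headers | wc -l)" -eq 0 ]; then
        sudo service keepalived stop
    fi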

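8. Restart Keepalived

    service keepalived restart

When connecting to the database, use the VIP plus the HAProxy port (192.168.2.200:6309).

mysql: 192.168.2.200:6309

emqx_tcp: 192.168.2.200:6883

emqx_api: 192.168.2.200:18081

emqx_dashboard: 192.168.2.200:11083

Deploy Keepalived on the other HAProxy server in the same way.

To verify the setup, connect a MySQL client to 192.168.2.200:6309 and run insert, delete, update and select statements.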
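To see which server currently holds the VIP, for instance before and after stopping HAProxy on the master, the interface addresses can be inspected on each node. The helper below is a sketch with an invented name, holds_vip. It assumes the one-line format of ip -o -4 addr show, in which the fourth field is address/prefix.

```shell
#!/bin/sh
# Succeed if the given address appears in `ip -o -4 addr show` output read on stdin.
# Usage: ip -o -4 addr show dev <iface> | holds_vip <address>
holds_vip() {
    vip=$1
    awk -v vip="$vip" '{ split($4, a, "/"); if (a[1] == vip) found = 1 }
                       END { exit !found }'
}

# Example, run on either node:
#   ip -o -4 addr show dev enp0s3 | holds_vip 192.168.2.200 && echo "this node holds the VIP"
```

Under normal operation only one of the two nodes should report that it holds the address.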

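Article link: https://hqyman.cn/post/6963.html. Articles not original to this site may be reposted freely; reposts of original articles must keep this site's address.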