First, on each of the three hosts, add name-resolution entries for all three hosts and the Engine to /etc/hosts:

    [root@node110 ~]# vi /etc/hosts
    [root@node110 ~]# cat /etc/hosts
    172.16.100.106 engine106.com
    172.16.100.108 ovirt108.com
    172.16.100.109 node109.com
    172.16.100.110 node110.com
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    [root@node110 ~]# ^C
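Editing /etc/hosts by hand on three machines makes it easy to miss or duplicate a line. A minimal sketch, assuming a POSIX shell, that appends an entry only when the hostname is not already listed; `add_host`, `add_all_hosts` and the `HOSTS_FILE` variable are illustrative names, not part of oVirt:

```shell
#!/bin/sh
# Hypothetical helper (not part of oVirt): append "IP NAME" to a hosts file
# unless NAME is already listed. Point HOSTS_FILE at a scratch copy to try it.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

add_host() {
    ip="$1"
    name="$2"
    # Look for NAME as a whole hostname field, ignoring commented-out lines
    if awk -v n="$name" '
        /^[ \t]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$HOSTS_FILE" 2>/dev/null; then
        echo "skip:  $name already in $HOSTS_FILE"
    else
        printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
        echo "added: $ip $name"
    fi
}

# The Engine and the three hosts used in this article; adjust to your network
add_all_hosts() {
    add_host 172.16.100.106 engine106.com
    add_host 172.16.100.108 ovirt108.com
    add_host 172.16.100.109 node109.com
    add_host 172.16.100.110 node110.com
}
```

Running `add_all_hosts` twice leaves the file unchanged the second time, so the same snippet can be pasted on every host.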

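Empty the /etc/yum.repos.d/ directory on all three hosts, or move its contents to another directory. This removes every yum repository, so that the update checks during deployment cannot fail because of network problems and make the whole deployment fail: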

    [root@node110 ~]# mkdir /root/yumbak
    [root@node110 ~]# mv /etc/yum.repos.d/* /root/yumbak/
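The commands above move everything with `mv /etc/yum.repos.d/*`. A slightly more careful sketch, assuming the default paths used here, moves only `*.repo` files and provides the reverse step, so that a later restore does not also move unrelated files that end up in /root/yumbak (such as the Engine appliance RPM). `backup_repos` and `restore_repos` are hypothetical names:

```shell
#!/bin/sh
# Hypothetical helpers: move the *.repo files out of the yum repo directory
# before the offline deployment, and put them back afterwards.
backup_repos() {
    repo_dir="${1:-/etc/yum.repos.d}"
    backup_dir="${2:-/root/yumbak}"
    mkdir -p "$backup_dir"
    for f in "$repo_dir"/*.repo; do
        # When nothing matches, the pattern stays literal; skip it
        [ -e "$f" ] || continue
        mv "$f" "$backup_dir"/
    done
}

restore_repos() {
    repo_dir="${1:-/etc/yum.repos.d}"
    backup_dir="${2:-/root/yumbak}"
    for f in "$backup_dir"/*.repo; do
        [ -e "$f" ] || continue
        mv "$f" "$repo_dir"/
    done
}
```

After `backup_repos`, `yum repolist` should report no enabled repositories; `restore_repos` undoes the change once the deployment is finished.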

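Next, configure passwordless SSH login on one of the hosts, specifically the one that will later run the HostedEngine deployment, for example host1. The hostnames in the commands below are examples; in this environment the hosts are ovirt108.com, node109.com and node110.com: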

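    ssh-keygen                      # press Enter to accept all defaults
    ssh-copy-id root@node241.com    # enter the root password when prompted
    ssh-copy-id root@node242.com    # enter the root password when prompted
    ssh-copy-id root@node243.com    # enter the root password when prompted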

The session on ovirt108 (the first `ssh-copy-id` targeted node108.com, a typo for ovirt108.com, and timed out):

    [root@ovirt108 ~]# ssh-keygen
    Generating public/private rsa key pair.
    Enter file in which to save the key (/root/.ssh/id_rsa):
    Created directory '/root/.ssh'.
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /root/.ssh/id_rsa.
    Your public key has been saved in /root/.ssh/id_rsa.pub.
    The key fingerprint is:
    SHA256:ZeSW5zkOhDCX3Xm/4zNG1jrF9npsWZGtQMA/UeQ4aYs root@ovirt108.com
    The key's randomart image is:
    +---[RSA 3072]----+
    | o .+oo +o |
    | +.+o.=+. |
    | . Bo*o..o|
    | = =++ oo|
    | S E =o o+|
    | o ..=*|
    | . +==|
    | o*=|
    | o=o|
    +----[SHA256]-----+
    [root@ovirt108 ~]# ssh-copy-id root@node108.com
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: ERROR: ssh: connect to host node108.com port 22: Connection timed out
    [root@ovirt108 ~]#
    [root@ovirt108 ~]# hostname
    ovirt108.com
    [root@ovirt108 ~]# cat /etc/hosts
    172.16.100.106 engine106.com
    172.16.100.108 ovirt108.com
    172.16.100.109 node109.com
    172.16.100.110 node110.com
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    [root@ovirt108 ~]# ssh-copy-id root@ovirt108.com
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
    The authenticity of host 'ovirt108.com (172.16.100.108)' can't be established.
    ECDSA key fingerprint is SHA256:a0ZLuLnjkmZPQPEu5tHROYJRaAv8HLr89R47ipQNzOk.
    Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@ovirt108.com's password:
    Number of key(s) added: 1
    Now try logging into the machine, with:   "ssh 'root@ovirt108.com'"
    and check to make sure that only the key(s) you wanted were added.
    [root@ovirt108 ~]# ssh-copy-id root@node109.com
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
    The authenticity of host 'node109.com (172.16.100.109)' can't be established.
    ECDSA key fingerprint is SHA256:Y025SHfxJrChSnWXqknkEI7FeAB9de8Dcr4FVpKdYSk.
    Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@node109.com's password:
    Number of key(s) added: 1
    Now try logging into the machine, with:   "ssh 'root@node109.com'"
    and check to make sure that only the key(s) you wanted were added.
    [root@ovirt108 ~]# ssh-copy-id root@node110.com
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
    The authenticity of host 'node110.com (172.16.100.110)' can't be established.
    ECDSA key fingerprint is SHA256:yBQOh+stuvtnozYZ9byU8EC3VgBrh9ta5bzhTgRlCnk.
    Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@node110.com's password:
    Number of key(s) added: 1
    Now try logging into the machine, with:   "ssh 'root@node110.com'"
    and check to make sure that only the key(s) you wanted were added.
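The same key distribution can be scripted, with a check that each login really works without a password; a mistyped hostname such as node108.com then fails visibly. A minimal sketch, assuming OpenSSH's `ssh-keygen`, `ssh-copy-id` and `ssh` on the deployment host; `run`, `distribute_keys` and `DRY_RUN` are hypothetical names:

```shell
#!/bin/sh
# Hypothetical helper: create root's key pair if missing, push it to each host,
# then confirm key-based login. DRY_RUN=1 prints the commands instead.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

distribute_keys() {
    if [ ! -f "$HOME/.ssh/id_rsa" ]; then
        run mkdir -p -m 700 "$HOME/.ssh"
        # -N "" sets an empty passphrase, matching "press Enter for all defaults"
        run ssh-keygen -t rsa -N "" -f "$HOME/.ssh/id_rsa"
    fi
    for h in "$@"; do
        run ssh-copy-id "root@$h"
        # BatchMode=yes makes ssh fail instead of asking for a password,
        # so a missing key shows up as an error rather than a prompt
        run ssh -o BatchMode=yes "root@$h" hostname
    done
}
```

For example, `distribute_keys ovirt108.com node109.com node110.com`; an existing key is never overwritten, unlike answering `y` to the "Overwrite" prompt.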

Copy the downloaded Engine appliance RPM to ovirt108 (the host configured above) with scp or a tool such as WinSCP, and put it in /root/yumbak. The package:

https://resources.ovirt.org/pub/ovirt-4.4/rpm/el8/x86_64/ovirt-engine-appliance-4.4-20220118150652.1.el8.x86_64.rpm

Install it with `rpm -ivh` (the session below installs a newer build of the same package, 20220308105414):

    [root@ovirt108 ~]# cd /root/yumbak/
    [root@ovirt108 yumbak]# ls
    CentOS-Stream-AppStream.repo  CentOS-Stream-BaseOS.repo  CentOS-Stream-Debuginfo.repo  CentOS-Stream-Extras.repo
    CentOS-Stream-HighAvailability.repo  CentOS-Stream-Media.repo  CentOS-Stream-NFV.repo  CentOS-Stream-PowerTools.repo
    CentOS-Stream-RealTime.repo  CentOS-Stream-ResilientStorage.repo  CentOS-Stream-Sources.repo  epel-modular.repo
    epel.repo  epel-testing-modular.repo  epel-testing.repo  glusterfs.repo  node-optional.repo
    ovirt-4.4-dependencies.repo  ovirt-4.4.repo  ovirt-engine-appliance-4.4-20220308105414.1.el8.x86_64.rpm
    [root@ovirt108 yumbak]# rpm -ivh ovirt-engine-appliance-4.4-20220308105414.1.el8.x86_64.rpm
    warning: ovirt-engine-appliance-4.4-20220308105414.1.el8.x86_64.rpm: Header V4 RSA/SHA256 Signature, key ID fe590cb7: NOKEY
    Verifying...                          ################################# [100%]
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:ovirt-engine-appliance-4.4-202203################################# [100%]
    [root@ovirt108 yumbak]#

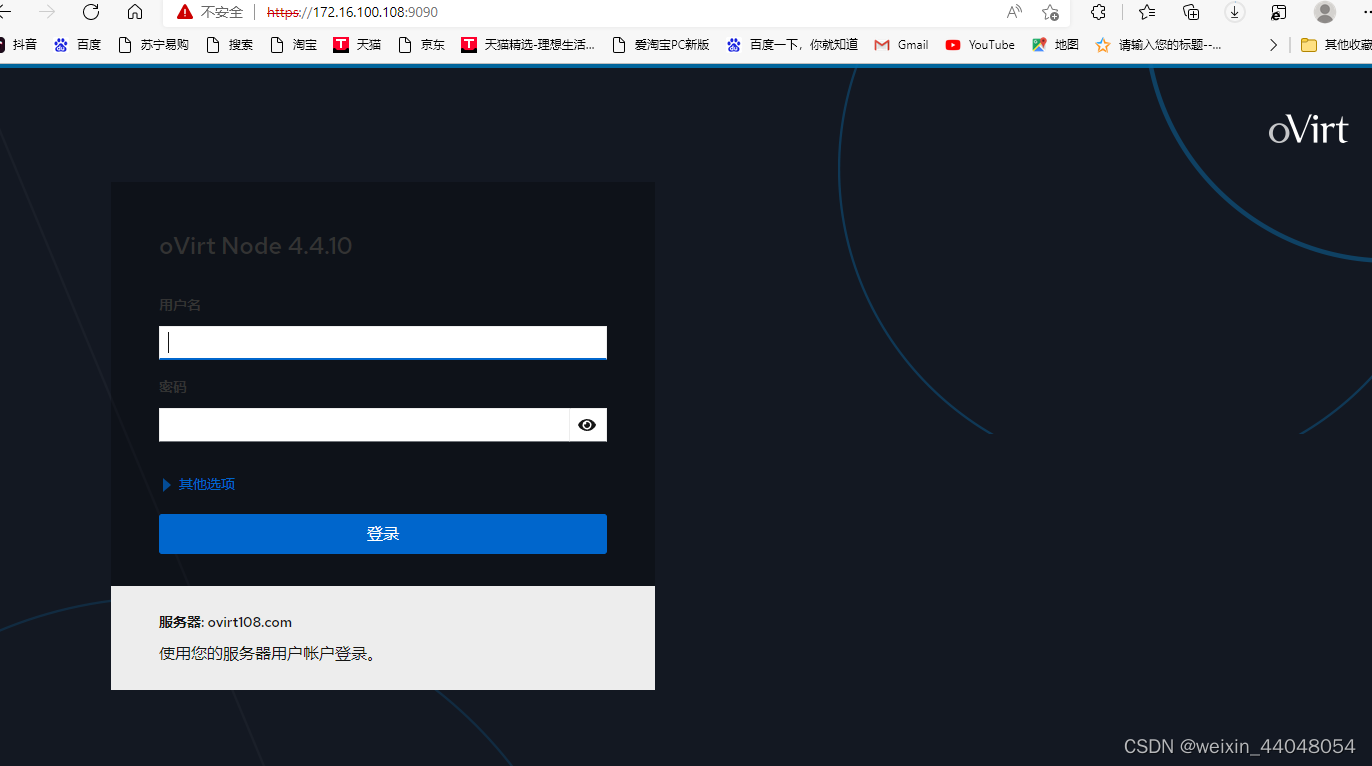

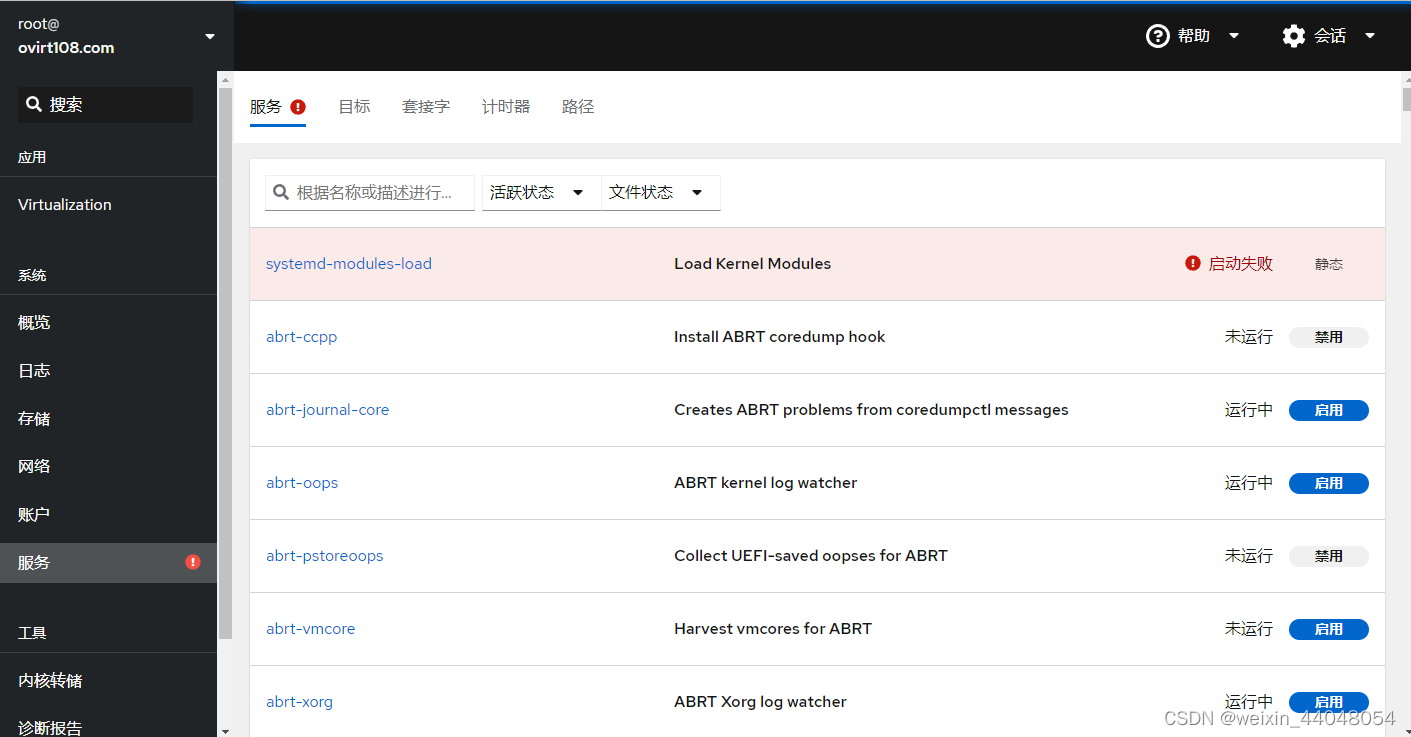

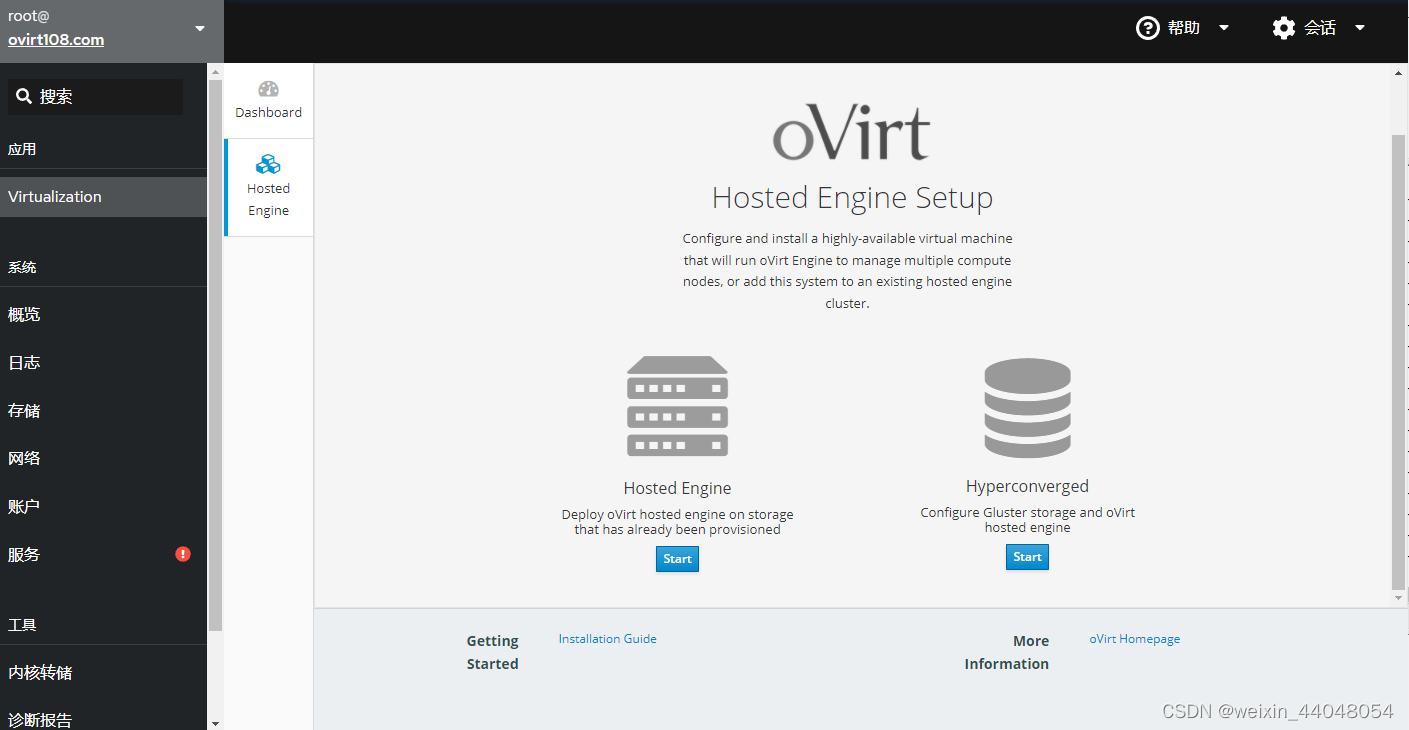

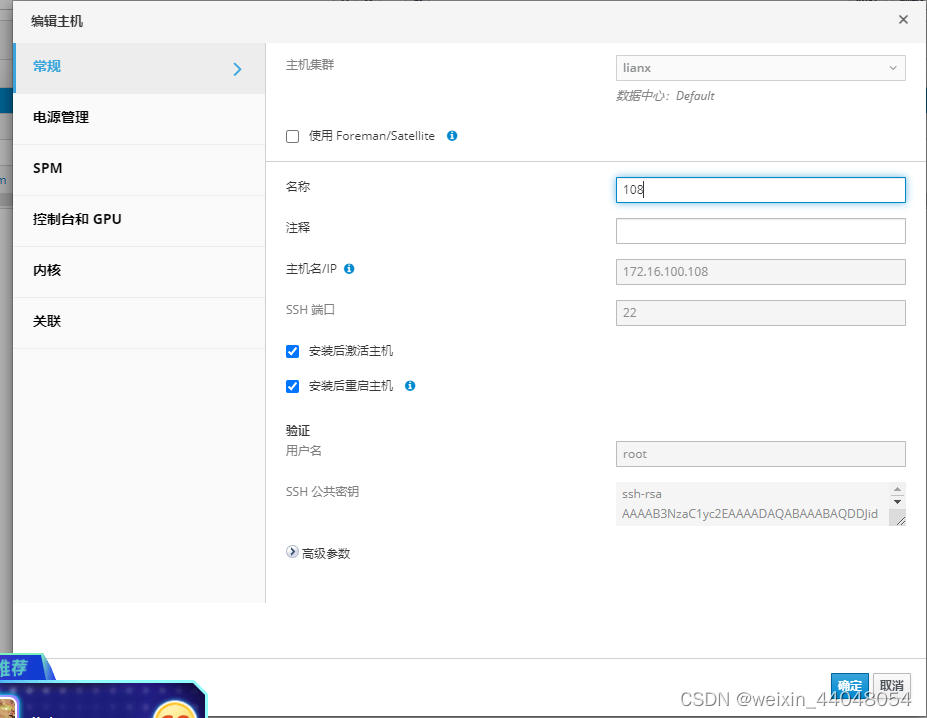

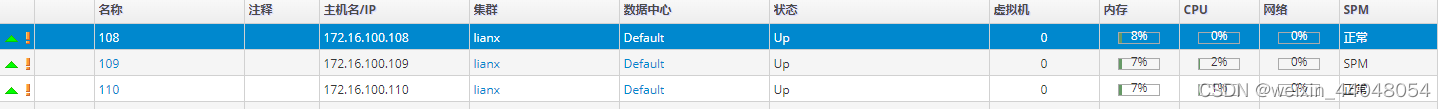

After the installation, open this host's web console (Cockpit) in a browser at https://172.16.100.108:9090 (replace the IP address with the one in your environment) and log in as root.

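Installing Gluster

Install CentOS 7 as a minimal installation, and configure the hostname and the IP address of the first NIC (all nodes). The reference guide lists "CentOS 7.6 1804"; note that 1804 is the 7.5 release, while 7.6 is 1810.

Configure bonding of the two NICs (all nodes):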

    more /etc/sysconfig/network-scripts/ifcfg-bond0
    DEVICE=bond0
    BONDING_OPTS='mode=4 miimon=100 xmit_hash_policy=2'
    ONBOOT=yes
    DEFROUTE=no
    NM_CONTROLLED=no
    IPV6INIT=no
    BOOTPROTO=static
    IPADDR=100.100.100.4
    NETMASK=255.255.255.0

    more ifcfg-ens1f0
    DEVICE=ens1f0
    MASTER=bond0
    SLAVE=yes
    ONBOOT=yes
    DEFROUTE=no
    NM_CONTROLLED=no
    IPV6INIT=no

    more ifcfg-ens2f0
    DEVICE=ens2f0
    MASTER=bond0
    SLAVE=yes
    ONBOOT=yes
    DEFROUTE=no
    NM_CONTROLLED=no
    IPV6INIT=no
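The three files differ between nodes only in IPADDR, so they can be generated instead of typed. A minimal sketch that writes exactly the settings above; `write_bond_config` is a hypothetical name, and the bonding options are copied from the guide (mode=4 is 802.3ad/LACP, which also requires LACP to be configured on the switch ports):

```shell
#!/bin/sh
# Hypothetical helper: write ifcfg files for bond0 plus its slave NICs.
# Arguments: output dir, bond IP, netmask, then the slave NIC names.
write_bond_config() {
    dir="$1"; ip="$2"; mask="$3"; shift 3
    mkdir -p "$dir"
    # printf reuses its format for each argument, writing one line per setting
    printf '%s\n' \
        'DEVICE=bond0' \
        'BONDING_OPTS="mode=4 miimon=100 xmit_hash_policy=2"' \
        'ONBOOT=yes' \
        'DEFROUTE=no' \
        'NM_CONTROLLED=no' \
        'IPV6INIT=no' \
        'BOOTPROTO=static' \
        "IPADDR=$ip" \
        "NETMASK=$mask" > "$dir/ifcfg-bond0"
    for nic in "$@"; do
        printf '%s\n' \
            "DEVICE=$nic" \
            'MASTER=bond0' \
            'SLAVE=yes' \
            'ONBOOT=yes' \
            'DEFROUTE=no' \
            'NM_CONTROLLED=no' \
            'IPV6INIT=no' > "$dir/ifcfg-$nic"
    done
}
```

On ovm5, for instance: `write_bond_config /etc/sysconfig/network-scripts 100.100.100.5 255.255.255.0 ens1f0 ens2f0`. After the network restart, `cat /proc/net/bonding/bond0` shows the active bonding mode and the state of each slave.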

Edit /etc/hosts (all nodes):

    more /etc/hosts
    172.16.10.4 ovm4.example.com ovm4
    172.16.10.5 ovm5.example.com ovm5
    172.16.10.6 ovm6.example.com ovm6
    100.100.100.4 ovm4stor.example.com ovm4stor
    100.100.100.5 ovm5stor.example.com ovm5stor
    100.100.100.6 ovm6stor.example.com ovm6stor

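After completing these two steps, restart the network service, then ping every node's IP addresses and FQDNs from the other nodes to make sure the network works: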

systemctl restart network
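Pinging six addresses and six names from each node by hand is tedious. A minimal sketch, assuming Linux iputils `ping` (where `-W` is the reply timeout in seconds); `check_reachable` and `PING_CMD` are hypothetical names, and `PING_CMD` only exists so the loop can be tried without a network:

```shell
#!/bin/sh
# Hypothetical helper: ping every IP/FQDN once and report failures.
# Returns non-zero if any target is unreachable.
PING_CMD="${PING_CMD:-ping -c 1 -W 1}"

check_reachable() {
    failed=0
    for target in "$@"; do
        # $PING_CMD is intentionally unquoted so its options split into words
        if $PING_CMD "$target" >/dev/null 2>&1; then
            echo "ok   $target"
        else
            echo "FAIL $target"
            failed=1
        fi
    done
    return "$failed"
}
```

From ovm4, for example: `check_reachable 172.16.10.5 ovm5.example.com 100.100.100.5 ovm5stor.example.com 172.16.10.6 ovm6.example.com 100.100.100.6 ovm6stor.example.com`.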

Disable SELinux and synchronize the clock (all nodes):

    sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config
    setenforce 0
    ntpdate time.windows.com
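The `sed` one-liner only changes the file if it currently says `enforcing`; a host already set to `permissive` is left untouched. A minimal sketch that sets the mode whatever the current value is, assuming GNU `sed -i`; `set_selinux_mode` is a hypothetical name:

```shell
#!/bin/sh
# Hypothetical helper: set SELINUX=<mode> in a SELinux config file,
# whatever the current value is.
set_selinux_mode() {
    mode="$1"
    conf="${2:-/etc/selinux/config}"
    # Anchor on "SELINUX=" so the SELINUXTYPE= line is not touched
    sed -i "s/^SELINUX=.*/SELINUX=$mode/" "$conf"
}
```

`set_selinux_mode disabled` changes the setting used at the next boot; `setenforce 0` is still what relaxes enforcement on the running system. On EL8 hosts, such as the oVirt Node 4.4 machines in this article, `ntpdate` is no longer shipped, and chronyd (for example `chronyc makestep`) is the usual way to step the clock.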

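Author: 运维安全专员

Link: https://www.jianshu.com/p/9287d7d53868

Source: Jianshu (简书)

Copyright belongs to the author. For commercial reproduction, contact the author for permission; for non-commercial reproduction, please credit the source.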

Configure SSH trust between the nodes (all nodes):

    [root@ovirt108 ~]# ssh-keygen -t rsa
    Generating public/private rsa key pair.
    Enter file in which to save the key (/root/.ssh/id_rsa):
    /root/.ssh/id_rsa already exists.
    Overwrite (y/n)? y
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /root/.ssh/id_rsa.
    Your public key has been saved in /root/.ssh/id_rsa.pub.
    The key fingerprint is:
    SHA256:9zG0He2GYgAzhXJ0fpR6YV2EYiXUYIv5eZryBj9xG2Q root@ovirt108.com
    The key's randomart image is:
    +---[RSA 3072]----+
    | .=oo.**.+o|
    | . o* =*o+. |
    | o =++o. .|
    | .=.+E+ |
    | S ..Oo+ o|
    | ..o.Bo. |
    | .o+o o |
    | o+ . |
    | ... |
    +----[SHA256]-----+
    [root@ovirt108 ~]# ssh-copy-id ovirt108.com
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@ovirt108.com's password:
    Number of key(s) added: 1
    Now try logging into the machine, with:   "ssh 'ovirt108.com'"
    and check to make sure that only the key(s) you wanted were added.
    [root@ovirt108 ~]# ssh-copy-id node109.com
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@node109.com's password:
    Number of key(s) added: 1
    Now try logging into the machine, with:   "ssh 'node109.com'"
    and check to make sure that only the key(s) you wanted were added.
    [root@ovirt108 ~]# ssh-copy-id node110.com
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@node110.com's password:
    Number of key(s) added: 1
    Now try logging into the machine, with:   "ssh 'node110.com'"
    and check to make sure that only the key(s) you wanted were added.
    [root@ovirt108 ~]# ssh-copy-id 172.16.100.108
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.
            (if you think this is a mistake, you may want to use -f option)
    [root@ovirt108 ~]# ssh-copy-id 172.16.100.109
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.
            (if you think this is a mistake, you may want to use -f option)
    [root@ovirt108 ~]# ssh-copy-id 172.16.100.110
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: WARNING: All keys were skipped because they already exist on the remote system.
            (if you think this is a mistake, you may want to use -f option)

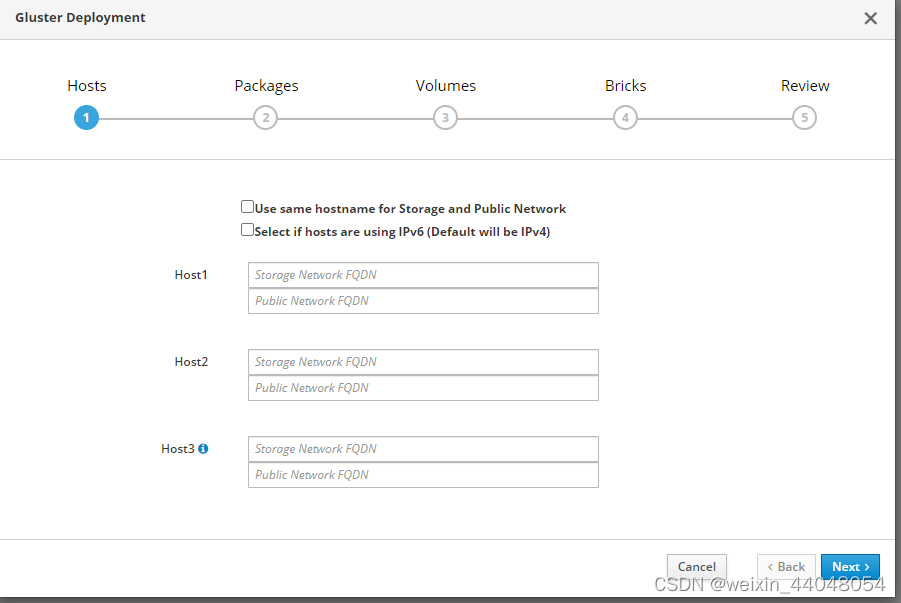

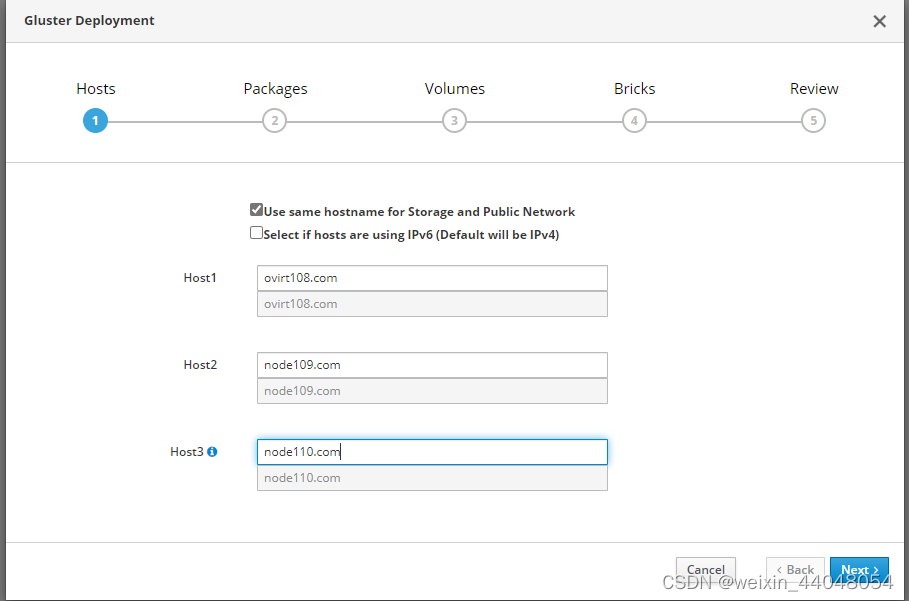

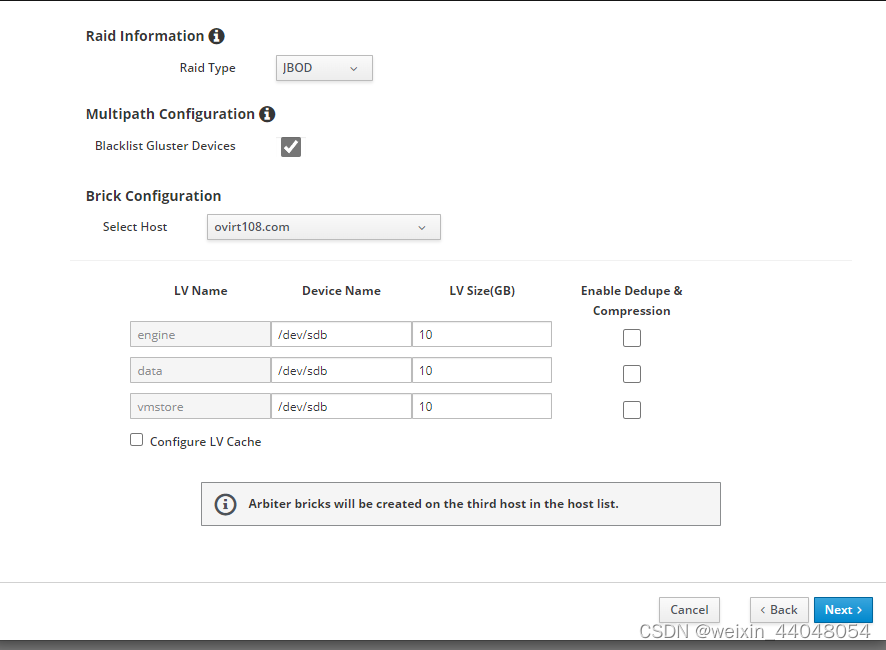

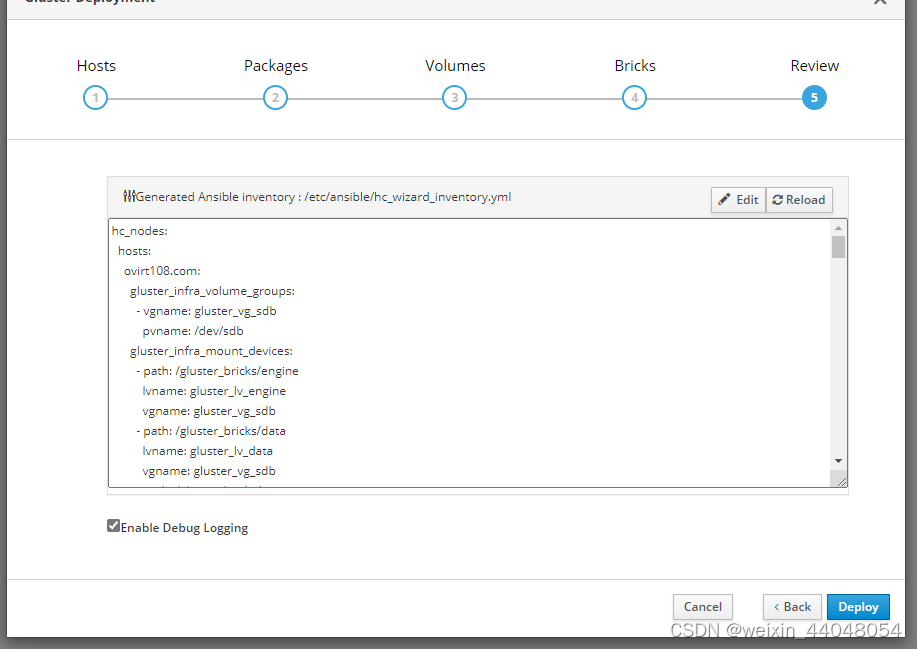

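GlusterFS deployment

The official documentation recommends at least three nodes for GlusterFS, each with at least two partitions: one for the operating system and one for GlusterFS storage.

Partition, format and mount the brick disk (all nodes):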

    [root@ovirt108 ~]# parted /dev/sdb
    GNU Parted 3.2
    Using /dev/sdb
    Welcome to GNU Parted! Type 'help' to view a list of commands.
    (parted) ^C
    [root@ovirt108 ~]# fdisk -l
    Disk /dev/sda: 80 GiB, 85899345920 bytes, 167772160 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0xbb2642f5
    Device     Boot   Start       End   Sectors Size Id Type
    /dev/sda1  *       2048   2099199   2097152   1G 83 Linux
    /dev/sda2       2099200 167772159 165672960  79G 8e Linux LVM
    Disk /dev/sdb: 70 GiB, 75161927680 bytes, 146800640 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0xd9a04b99
    Device     Boot Start       End   Sectors Size Id Type
    /dev/sdb1        2048 146800639 146798592  70G 83 Linux
    Disk /dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1: 22 GiB, 23622320128 bytes, 46137344 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 65536 bytes / 65536 bytes
    Disk /dev/mapper/onn-swap: 4 GiB, 4315938816 bytes, 8429568 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk /dev/mapper/onn-var_log_audit: 2 GiB, 2147483648 bytes, 4194304 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 65536 bytes / 65536 bytes
    Disk /dev/mapper/onn-var_log: 8 GiB, 8589934592 bytes, 16777216 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 65536 bytes / 65536 bytes
    Disk /dev/mapper/onn-var_crash: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 65536 bytes / 65536 bytes
    Disk /dev/mapper/onn-var: 15 GiB, 16106127360 bytes, 31457280 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 65536 bytes / 65536 bytes
    Disk /dev/mapper/onn-tmp: 1 GiB, 1073741824 bytes, 2097152 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 65536 bytes / 65536 bytes
    Disk /dev/mapper/onn-home: 1 GiB, 1073741824 bytes, 2097152 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 65536 bytes / 65536 bytes
    [root@ovirt108 ~]# mkpart
    -bash: mkpart: command not found
    [root@ovirt108 ~]# parted /dev/sdb
    GNU Parted 3.2
    Using /dev/sdb
    Welcome to GNU Parted! Type 'help' to view a list of commands.
    (parted) mkpart primary 70G -1
    Error: Can't have overlapping partitions.
    Ignore/Cancel? ^C
    (parted)
    align-check  mklabel  print  resizepart  toggle
    disk_set  mkpart  quit  rm  unit
    disk_toggle  mktable  rescue  select  version
    help  name  resize  set
    (parted) mkpart
    Partition type?  primary/extended? primary
    File system type?  [ext2]? xfs
    Start? 0%
    End? 100%
    (parted)
    align-check  mklabel  print  resizepart  toggle
    disk_set  mkpart  quit  rm  unit
    disk_toggle  mktable  rescue  select  version
    help  name  resize  set
    (parted) p
    Model: VMware Virtual disk (scsi)
    Disk /dev/sdb: 75.2GB
    Sector size (logical/physical): 512B/512B
    Partition Table: msdos
    Disk Flags:
    Number  Start   End     Size    Type     File system  Flags
     1      1049kB  75.2GB  75.2GB  primary  xfs          lba
    (parted) q
    Information: You may need to update /etc/fstab.
    [root@ovirt108 ~]# mkdir -p /gluster_bricks
    [root@ovirt108 ~]# blkid
    /dev/sr0: BLOCK_SIZE="2048" UUID="2022-03-03-08-20-27-00" LABEL="CentOS-Stream-8-x86_64-dvd" TYPE="iso9660" PTUUID="250e8015" PTTYPE="dos"
    /dev/sda1: UUID="22b64f77-42f9-49fe-a72e-0c620e704cf6" BLOCK_SIZE="512" TYPE="xfs" PARTUUID="bb2642f5-01"
    /dev/sda2: UUID="JFvK2K-XchK-WrhO-MAUS-Wxx1-271A-juwQp2" TYPE="LVM2_member" PARTUUID="bb2642f5-02"
    /dev/sdb1: UUID="e3ee9920-4817-49b3-be13-58f0b8ce1d65" BLOCK_SIZE="512" TYPE="xfs" PARTUUID="af52d656-01"
    /dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1: UUID="fc6c1e69-d3c8-414c-bb4c-f851ac6f6722" BLOCK_SIZE="512" TYPE="xfs"
    /dev/mapper/onn-swap: UUID="951aa20c-6f8b-44b4-82da-ae2768bbb674" TYPE="swap"
    /dev/mapper/onn-var_log_audit: UUID="73658248-7810-45e8-85a1-3f9716f13dc6" BLOCK_SIZE="512" TYPE="xfs"
    /dev/mapper/onn-var_log: UUID="f6635362-4cf5-4237-bc12-66dcc3d0a479" BLOCK_SIZE="512" TYPE="xfs"
    /dev/mapper/onn-var_crash: UUID="6030a2ae-fb93-4270-b803-3290d795b3cc" BLOCK_SIZE="512" TYPE="xfs"
    /dev/mapper/onn-var: UUID="92cb454c-1cfb-4f1a-9b5b-4123e5a48734" BLOCK_SIZE="512" TYPE="xfs"
    /dev/mapper/onn-tmp: UUID="413bad74-04ae-4d4b-8e95-4fb04b4ebbae" BLOCK_SIZE="512" TYPE="xfs"
    /dev/mapper/onn-home: UUID="ecdfcc80-f9c8-4075-893b-dd358f377ec0" BLOCK_SIZE="512" TYPE="xfs"
    [root@ovirt108 ~]# ech0 'UUID="e3ee9920-4817-49b3-be13-58f0b8ce1d65" xfs default 0 0' >> /etc/fstab
    -bash: ech0: command not found
    [root@ovirt108 ~]# echo 'UUID="e3ee9920-4817-49b3-be13-58f0b8ce1d65" xfs default 0 0' >> /etc/fstab
    [root@ovirt108 ~]# mount -a && mount
    mount: xfs: mount point does not exist.
    [root@ovirt108 ~]# df -h
    Filesystem Size Used Avail Use% Mounted on
    devtmpfs 3.9G 0 3.9G 0% /dev
    tmpfs 3.9G 0 3.9G 0% /dev/shm
    tmpfs 3.9G 75M 3.8G 2% /run
    tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup
    /dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1 22G 6.6G 16G 30% /
    /dev/sda1 1014M 350M 665M 35% /boot
    /dev/mapper/onn-home 1014M 40M 975M 4% /home
    /dev/mapper/onn-tmp 1014M 40M 975M 4% /tmp
    /dev/mapper/onn-var 15G 393M 15G 3% /var
    /dev/mapper/onn-var_crash 10G 105M 9.9G 2% /var/crash
    /dev/mapper/onn-var_log 8.0G 112M 7.9G 2% /var/log
    /dev/mapper/onn-var_log_audit 2.0G 60M 2.0G 3% /var/log/audit
    tmpfs 792M 0 792M 0% /run/user/0
    [root@ovirt108 ~]#
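Two details in the session above are worth noting: parted's `mkpart` only records a file-system type hint and does not create a filesystem (blkid already reported XFS on /dev/sdb1, presumably from an earlier attempt), and the appended fstab line lacked the mount point. A minimal sketch of the whole partition, format and mount step, assuming an empty whole-disk device named like /dev/sdb (so the partition is `${disk}1`; NVMe devices use a `p1` suffix instead). `prepare_brick_disk`, `fstab_entry` and `DRY_RUN` are hypothetical names, and without `DRY_RUN=1` the function wipes the disk:

```shell
#!/bin/sh
# Hypothetical helper: partition a whole disk, format it as XFS and mount it
# persistently by UUID. DESTRUCTIVE: it wipes DISK. DRY_RUN=1 only prints steps.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

fstab_entry() {
    # Fields: device, mount point, fs type, options, dump, fsck pass
    printf 'UUID=%s %s xfs defaults 0 0\n' "$1" "$2"
}

prepare_brick_disk() {
    disk="$1"; mnt="$2"
    run parted -s "$disk" mklabel gpt mkpart primary xfs 0% 100%
    run udevadm settle                 # wait for the new partition node
    run mkfs.xfs -f "${disk}1"         # parted does not create the filesystem
    run mkdir -p "$mnt"
    if [ "${DRY_RUN:-0}" = 1 ]; then
        uuid=DRY-RUN-UUID
    else
        uuid=$(blkid -s UUID -o value "${disk}1")
    fi
    entry=$(fstab_entry "$uuid" "$mnt")
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "append to /etc/fstab: $entry"
    else
        echo "$entry" >> /etc/fstab
    fi
    run mount -a
}
```

`DRY_RUN=1 prepare_brick_disk /dev/sdb /gluster_bricks` prints the steps for review before anything is changed.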

Install the GlusterFS components and open the firewall (all nodes):

    yum install -y vdsm-gluster
    systemctl enable --now glusterd
    firewall-cmd --add-service=glusterfs --permanent
    firewall-cmd --reload

```shell
[root@ovirt108 ~]# yum install -y vdsm-gluster
GlusterFS is a clustered file-system capable of  1.1 kB/s | 8.1 kB     00:07
Errors during downloading metadata for repository 'glusterfs-epel':
Status code: 404 for https://buildlogs.centos.org/centos/8-stream/storage/x86_64/gluster-8/Packages/g/repodata/repomd.xml (IP: 3.8.21.190)
Error: Failed to download metadata for repo 'glusterfs-epel': Cannot download repomd.xml: Cannot download repodata/repomd.xml: All mirrors were tried
Ignoring repositories: glusterfs-epel
Last metadata expiration check: 1 day, 3:43:36 ago on Thu 19 May 2022 02:29:20 AM CST.
Package vdsm-gluster-4.40.100.2-1.el8.x86_64 is already installed.
Dependencies resolved.
Nothing to do.
Complete!
[root@ovirt108 ~]# systemctl enable --now glusterd
Created symlink /etc/systemd/system/multi-user.target.wants/glusterd.service → /usr/lib/systemd/system/glusterd.service.
[root@ovirt108 ~]# firewall-cmd --add-service=glusterfs --permanent
Warning: ALREADY_ENABLED: glusterfs
success
[root@ovirt108 ~]# firewall-cmd --reload
success
```

The 404 for the `glusterfs-epel` repository does not block anything here: yum ignores that repository and reports that vdsm-gluster is already installed on this oVirt Node host.
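With SSH trust already in place, the same four commands can be pushed to every node from one host. A minimal sketch, assuming root SSH access to the hostnames used in this environment; `setup_gluster_nodes`, `GLUSTER_SETUP` and `DRY_RUN` are illustrative names, not part of oVirt or GlusterFS:

```shell
#!/bin/sh
# Hypothetical helper: run the glusterd/firewalld steps on every node over SSH.
# DRY_RUN=1 prints the remote command instead of running it.
GLUSTER_SETUP='yum install -y vdsm-gluster && systemctl enable --now glusterd && firewall-cmd --add-service=glusterfs --permanent && firewall-cmd --reload'

setup_gluster_nodes() {
    for h in "$@"; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "ssh root@$h $GLUSTER_SETUP"
        else
            # && stops at the first failing step on that node
            ssh "root@$h" "$GLUSTER_SETUP" || echo "setup failed on $h" >&2
        fi
    done
}
```

For example: `setup_gluster_nodes ovirt108.com node109.com node110.com`.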

Add the storage hosts to the trusted storage pool. The reference guide probes each peer by short name, FQDN and storage IP: `gluster peer probe ovm5stor`, `gluster peer probe ovm6stor`, `gluster peer probe ovm5stor.example.com`, `gluster peer probe ovm6stor.example.com`, `gluster peer probe 100.100.100.5` and `gluster peer probe 100.100.100.6`. Probing from one node is enough: as the node109 output below shows, probing a host that is already a member only reports that it is in the peer list. The sessions on ovirt108, node109 and node110:

```shell
[root@ovirt108 ~]# gluster peer probe node109.com
peer probe: success
[root@ovirt108 ~]# gluster peer probe node110.com
peer probe: success
[root@ovirt108 ~]# gluster peer probe 172.16.100.109
peer probe: success
[root@ovirt108 ~]# gluster peer probe 172.16.100.110
peer probe: success
[root@ovirt108 ~]# gluster peer status
Number of Peers: 2

Hostname: node109.com
Uuid: 166bd34c-a652-4f5f-b87f-9d40c0961c6c
State: Peer in Cluster (Connected)
Other names:
172.16.100.109

Hostname: node110.com
Uuid: 36352480-6e7f-45eb-9b69-c2118b7c15b9
State: Peer in Cluster (Connected)
Other names:
172.16.100.110
[root@ovirt108 ~]#

[root@node109 ~]# systemctl enable --now glusterd
Created symlink /etc/systemd/system/multi-user.target.wants/glusterd.service → /usr/lib/systemd/system/glusterd.service.
[root@node109 ~]# firewall-cmd --add-service=glusterfs --permanent
Warning: ALREADY_ENABLED: glusterfs
success
[root@node109 ~]# firewall-cmd --reload
success
[root@node109 ~]# gluster peer probe node110.com
peer probe: Host node110.com port 24007 already in peer list
[root@node109 ~]# gluster peer status
Number of Peers: 2

Hostname: ovirt108.com
Uuid: 5aacbbcd-e085-45b0-96ab-98a72630c233
State: Peer in Cluster (Connected)

Hostname: node110.com
Uuid: 36352480-6e7f-45eb-9b69-c2118b7c15b9
State: Peer in Cluster (Connected)
Other names:
172.16.100.110

[root@node110 ~]# systemctl enable --now glusterd
Created symlink /etc/systemd/system/multi-user.target.wants/glusterd.service → /usr/lib/systemd/system/glusterd.service.
[root@node110 ~]# firewall-cmd --add-service=glusterfs --permanent
Warning: ALREADY_ENABLED: glusterfs
success
[root@node110 ~]# firewall-cmd --reload
success
[root@node110 ~]# gluster peer status
Number of Peers: 2

Hostname: ovirt108.com
Uuid: 5aacbbcd-e085-45b0-96ab-98a72630c233
State: Peer in Cluster (Connected)

Hostname: node109.com
Uuid: 166bd34c-a652-4f5f-b87f-9d40c0961c6c
State: Peer in Cluster (Connected)
Other names:
172.16.100.109
[root@node110 ~]#
```
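In a three-node pool every node should see the other two as `Peer in Cluster (Connected)`. A minimal sketch of that check, reading `gluster peer status` output on standard input; `peers_connected` is a hypothetical name, and the matched string is taken from the output above:

```shell
#!/bin/sh
# Hypothetical helper: read `gluster peer status` output on stdin and succeed
# only if exactly EXPECTED peers are "Peer in Cluster (Connected)".
peers_connected() {
    expected="$1"
    # In a basic regex the parentheses are literal characters
    n=$(grep -c 'State: Peer in Cluster (Connected)')
    echo "connected peers: $n (expected $expected)"
    [ "$n" -eq "$expected" ]
}
```

On each node: `gluster peer status | peers_connected 2`.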

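Fix /etc/fstab. The entry appended earlier on ovirt108 had no mount-point field and said `default` instead of `defaults`, which is why `mount -a` failed with "mount point does not exist". The corrected last line reads `UUID="e3ee9920-4817-49b3-be13-58f0b8ce1d65" /gluster_bricks xfs defaults 0 0`; then mount it and check: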

    [root@ovirt108 /]# vi /etc/fstab
    [root@ovirt108 /]# mount -a && mount
    sysfs on /sys type sysfs (rw,nosuid,nodev,noexec,relatime)
    proc on /proc type proc (rw,nosuid,nodev,noexec,relatime)
    devtmpfs on /dev type devtmpfs (rw,nosuid,size=3997348k,nr_inodes=999337,mode=755)
    securityfs on /sys/kernel/security type securityfs (rw,nosuid,nodev,noexec,relatime)
    tmpfs on /dev/shm type tmpfs (rw,nosuid,nodev)
    devpts on /dev/pts type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=000)
    tmpfs on /run type tmpfs (rw,nosuid,nodev,mode=755)
    tmpfs on /sys/fs/cgroup type tmpfs (ro,nosuid,nodev,noexec,mode=755)
    cgroup on /sys/fs/cgroup/systemd type cgroup (rw,nosuid,nodev,noexec,relatime,xattr,release_agent=/usr/lib/systemd/systemd-cgroups-agent,name=systemd)
    pstore on /sys/fs/pstore type pstore (rw,nosuid,nodev,noexec,relatime)
    bpf on /sys/fs/bpf type bpf (rw,nosuid,nodev,noexec,relatime,mode=700)
    cgroup on /sys/fs/cgroup/hugetlb type cgroup (rw,nosuid,nodev,noexec,relatime,hugetlb)
    cgroup on /sys/fs/cgroup/blkio type cgroup (rw,nosuid,nodev,noexec,relatime,blkio)
    cgroup on /sys/fs/cgroup/memory type cgroup (rw,nosuid,nodev,noexec,relatime,memory)
    cgroup on /sys/fs/cgroup/rdma type cgroup (rw,nosuid,nodev,noexec,relatime,rdma)
    cgroup on /sys/fs/cgroup/freezer type cgroup (rw,nosuid,nodev,noexec,relatime,freezer)
    cgroup on /sys/fs/cgroup/pids type cgroup (rw,nosuid,nodev,noexec,relatime,pids)
    cgroup on /sys/fs/cgroup/net_cls,net_prio type cgroup (rw,nosuid,nodev,noexec,relatime,net_cls,net_prio)
    cgroup on /sys/fs/cgroup/devices type cgroup (rw,nosuid,nodev,noexec,relatime,devices)
    cgroup on /sys/fs/cgroup/cpuset type cgroup (rw,nosuid,nodev,noexec,relatime,cpuset)
    cgroup on /sys/fs/cgroup/cpu,cpuacct type cgroup (rw,nosuid,nodev,noexec,relatime,cpu,cpuacct)
    cgroup on /sys/fs/cgroup/perf_event type cgroup (rw,nosuid,nodev,noexec,relatime,perf_event)
    configfs on /sys/kernel/config type configfs (rw,relatime)
    /dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1 on / type xfs (rw,relatime,attr2,discard,inode64,logbufs=8,logbsize=64k,sunit=128,swidth=128,noquota)
    systemd-1 on /proc/sys/fs/binfmt_misc type autofs (rw,relatime,fd=29,pgrp=1,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=21173)
    debugfs on /sys/kernel/debug type debugfs (rw,relatime)
    mqueue on /dev/mqueue type mqueue (rw,relatime)
    hugetlbfs on /dev/hugepages type hugetlbfs (rw,relatime,pagesize=2M)
    /dev/sda1 on /boot type xfs (rw,relatime,attr2,inode64,logbufs=8,logbsize=32k,noquota)
    /dev/mapper/onn-home on /home type xfs (rw,relatime,attr2,discard,inode64,logbufs=8,logbsize=64k,sunit=128,swidth=128,noquota)
    /dev/mapper/onn-tmp on /tmp type xfs (rw,relatime,attr2,discard,inode64,logbufs=8,logbsize=64k,sunit=128,swidth=128,noquota)
    /dev/mapper/onn-var on /var type xfs (rw,relatime,attr2,discard,inode64,logbufs=8,logbsize=64k,sunit=128,swidth=128,noquota)
    /dev/mapper/onn-var_log on /var/log type xfs (rw,relatime,attr2,discard,inode64,logbufs=8,logbsize=64k,sunit=128,swidth=128,noquota)
    /dev/mapper/onn-var_crash on /var/crash type xfs (rw,relatime,attr2,discard,inode64,logbufs=8,logbsize=64k,sunit=128,swidth=128,noquota)
    /dev/mapper/onn-var_log_audit on /var/log/audit type xfs (rw,relatime,attr2,discard,inode64,logbufs=8,logbsize=64k,sunit=128,swidth=128,noquota)
    sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw,relatime)
    tmpfs on /run/user/0 type tmpfs (rw,nosuid,nodev,relatime,size=810124k,mode=700)
    /dev/sdb1 on /gluster_bricks type xfs (rw,relatime,attr2,inode64,logbufs=8,logbsize=32k,noquota)
    [root@ovirt108 /]# df -h
    Filesystem Size Used Avail Use% Mounted on
    devtmpfs 3.9G 0 3.9G 0% /dev
    tmpfs 3.9G 0 3.9G 0% /dev/shm
    tmpfs 3.9G 17M 3.9G 1% /run
    tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup
    /dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1 22G 6.6G 16G 30% /
    /dev/sda1 1014M 350M 665M 35% /boot
    /dev/mapper/onn-home 1014M 40M 975M 4% /home
    /dev/mapper/onn-tmp 1014M 40M 975M 4% /tmp
    /dev/mapper/onn-var 15G 393M 15G 3% /var
    /dev/mapper/onn-var_log 8.0G 113M 7.9G 2% /var/log
    /dev/mapper/onn-var_crash 10G 105M 9.9G 2% /var/crash
    /dev/mapper/onn-var_log_audit 2.0G 52M 2.0G 3% /var/log/audit
    tmpfs 792M 0 792M 0% /run/user/0
    /dev/sdb1 70G 532M 70G 1% /gluster_bricks
    [root@ovirt108 /]# cat /etc/fstab
    #
    # /etc/fstab
    # Created by anaconda on Tue May 17 21:32:01 2022
    #
    # Accessible filesystems, by reference, are maintained under '/dev/disk/'.
    # See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.
    #
    # After editing this file, run 'systemctl daemon-reload' to update systemd
    # units generated from this file.
    #
    /dev/onn/ovirt-node-ng-4.4.10.2-0.20220303.0+1 / xfs defaults,discard 0 0
    UUID=22b64f77-42f9-49fe-a72e-0c620e704cf6 /boot xfs defaults 0 0
    /dev/mapper/onn-home /home xfs defaults,discard 0 0
    /dev/mapper/onn-tmp /tmp xfs defaults,discard 0 0
    /dev/mapper/onn-var /var xfs defaults,discard 0 0
    /dev/mapper/onn-var_crash /var/crash xfs defaults,discard 0 0
    /dev/mapper/onn-var_log /var/log xfs defaults,discard 0 0
    /dev/mapper/onn-var_log_audit /var/log/audit xfs defaults,discard 0 0
    /dev/mapper/onn-swap none swap defaults 0 0
    UUID="e3ee9920-4817-49b3-be13-58f0b8ce1d65" /gluster_bricks xfs defaults 0 0
    [root@ovirt108 /]#

[root@node110 /]# mount -a && mount mount: /gluster_bricks: can't find UUID="039ddd2b-de5e-4741-a6f0-81101d293bbf".[root@node110 /]# parted /dev/sdb GNU Parted 3.2Using /dev/sdb Welcome to GNU Parted! Type 'help' to view a list of commands.(parted) mkpart Partition name? []?File system type? [ext2]? ^C Error: Expecting a file system type.(parted) mkpart primary File system type? [ext2]? xfs Start? 0%End? 100%Warning: You requested a partition from 0.00B to 75.2GB (sectors 0..146800639).The closest location we can manage is 17.4kB to 1048kB (sectors 34..2047).Is this still acceptable to you?Yes/No? yes Warning: The resulting partition is not properly aligned for best performance:34s % 2048s != 0s Ignore/Cancel? I(parted) p Model: VMware Virtual disk (scsi)Disk /dev/sdb: 75.2GB Sector size (logical/physical): 512B/512B Partition Table: gpt Disk Flags:Number Start End Size File system Name Flags 2 17.4kB 1049kB 1031kB xfs primary 1 1049kB 75.2GB 75.2GB xfs(parted)align-check mklabel print resizepart toggle disk_set mkpart quit rm unit disk_toggle mktable rescue select version help name resize set(parted) rm Partition number? 2(parted) p Model: VMware Virtual disk (scsi)Disk /dev/sdb: 75.2GB Sector size (logical/physical): 512B/512B Partition Table: gpt Disk Flags:Number Start End Size File system Name Flags 1 1049kB 75.2GB 75.2GB xfs(parted) rm Partition number? 1(parted) p Model: VMware Virtual disk (scsi)Disk /dev/sdb: 75.2GB Sector size (logical/physical): 512B/512B Partition Table: gpt Disk Flags:Number Start End Size File system Name Flags(parted) mkpart primary File system type? [ext2]? xfs Start? 0%End? 
100%(parted) p Model: VMware Virtual disk (scsi)Disk /dev/sdb: 75.2GB Sector size (logical/physical): 512B/512B Partition Table: gpt Disk Flags:Number Start End Size File system Name Flags 1 1049kB 75.2GB 75.2GB xfs primary(parted) q Information: You may need to update /etc/fstab.[root@node110 /]# blkid/dev/mapper/onn-var_crash: UUID="89a62c3c-7537-4b6b-92f3-0526a0148e16" BLOCK_SIZE="512" TYPE="xfs"/dev/sda2: UUID="DuV2GD-R4jV-MVch-9Okw-AOyq-yE71-59mvOs" TYPE="LVM2_member" PARTUUID="29902ec3-02"/dev/sda1: UUID="0c3b78f9-431b-47e8-9b14-9c9b58c97932" BLOCK_SIZE="512" TYPE="xfs" PARTUUID="29902ec3-01"/dev/sdb1: UUID="ac77a11c-6175-46d8-82ef-5d41b46c0a27" BLOCK_SIZE="512" TYPE="xfs" PARTLABEL="primary" PARTUUID="022236da-11ef-47a9-b609-aa29f50244c2"/dev/sr0: BLOCK_SIZE="2048" UUID="2022-03-03-08-20-27-00" LABEL="CentOS-Stream-8-x86_64-dvd" TYPE="iso9660" PTUUID="250e8015" PTTYPE="dos"/dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1: UUID="08903906-6000-4038-b224-c9ad76505867" BLOCK_SIZE="512" TYPE="xfs"/dev/mapper/onn-swap: UUID="d984a2f6-da82-496f-a755-e9aaf596c86d" TYPE="swap"/dev/mapper/onn-var_log_audit: UUID="ece1a2a3-0fcb-4fb5-a68a-3c92384a8ddc" BLOCK_SIZE="512" TYPE="xfs"/dev/mapper/onn-var_log: UUID="1f4963af-d0b8-4c69-92c4-f424bbf75805" BLOCK_SIZE="512" TYPE="xfs"/dev/mapper/onn-var: UUID="7ed192e6-48e4-47c4-9895-267f132ae459" BLOCK_SIZE="512" TYPE="xfs"/dev/mapper/onn-tmp: UUID="b019f539-b01d-4fc4-ad5d-3c0cfbdb3984" BLOCK_SIZE="512" TYPE="xfs"/dev/mapper/onn-home: UUID="9bf6c9e7-bbcd-4da6-bc04-b3794e7a1b8d" BLOCK_SIZE="512" TYPE="xfs"[root@node110 /]# echo 'UUID="ac77a11c-6175-46d8-82ef-5d41b46c0a27" /gluster_bricks xfs defaults 0 0' >> /etc/fstab[root@node110 /]# vi /etc/fstab[root@node110 /]# mount -a && mount sysfs on /sys type sysfs (rw,nosuid,nodev,noexec,relatime)proc on /proc type proc (rw,nosuid,nodev,noexec,relatime)devtmpfs on /dev type devtmpfs (rw,nosuid,size=3997568k,nr_inodes=999392,mode=755)securityfs on /sys/kernel/security type 
securityfs (rw,nosuid,nodev,noexec,relatime)
tmpfs on /dev/shm type tmpfs (rw,nosuid,nodev)
devpts on /dev/pts type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=000)
tmpfs on /run type tmpfs (rw,nosuid,nodev,mode=755)
tmpfs on /sys/fs/cgroup type tmpfs (ro,nosuid,nodev,noexec,mode=755)
cgroup on /sys/fs/cgroup/systemd type cgroup (rw,nosuid,nodev,noexec,relatime,xattr,release_agent=/usr/lib/systemd/systemd-cgroups-agent,name=systemd)
pstore on /sys/fs/pstore type pstore (rw,nosuid,nodev,noexec,relatime)
bpf on /sys/fs/bpf type bpf (rw,nosuid,nodev,noexec,relatime,mode=700)
cgroup on /sys/fs/cgroup/devices type cgroup (rw,nosuid,nodev,noexec,relatime,devices)
cgroup on /sys/fs/cgroup/net_cls,net_prio type cgroup (rw,nosuid,nodev,noexec,relatime,net_cls,net_prio)
cgroup on /sys/fs/cgroup/blkio type cgroup (rw,nosuid,nodev,noexec,relatime,blkio)
cgroup on /sys/fs/cgroup/cpu,cpuacct type cgroup (rw,nosuid,nodev,noexec,relatime,cpu,cpuacct)
cgroup on /sys/fs/cgroup/rdma type cgroup (rw,nosuid,nodev,noexec,relatime,rdma)
cgroup on /sys/fs/cgroup/hugetlb type cgroup (rw,nosuid,nodev,noexec,relatime,hugetlb)
cgroup on /sys/fs/cgroup/perf_event type cgroup (rw,nosuid,nodev,noexec,relatime,perf_event)
cgroup on /sys/fs/cgroup/memory type cgroup (rw,nosuid,nodev,noexec,relatime,memory)
cgroup on /sys/fs/cgroup/pids type cgroup (rw,nosuid,nodev,noexec,relatime,pids)
cgroup on /sys/fs/cgroup/freezer type cgroup (rw,nosuid,nodev,noexec,relatime,freezer)
cgroup on /sys/fs/cgroup/cpuset type cgroup (rw,nosuid,nodev,noexec,relatime,cpuset)
configfs on /sys/kernel/config type configfs (rw,relatime)
/dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1 on / type xfs (rw,relatime,attr2,discard,inode64,logbufs=8,logbsize=64k,sunit=128,swidth=128,noquota)
systemd-1 on /proc/sys/fs/binfmt_misc type autofs (rw,relatime,fd=36,pgrp=1,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=23337)
hugetlbfs on /dev/hugepages type hugetlbfs (rw,relatime,pagesize=2M)
mqueue on /dev/mqueue type mqueue (rw,relatime)
debugfs on /sys/kernel/debug type debugfs (rw,relatime)
/dev/sda1 on /boot type xfs (rw,relatime,attr2,inode64,logbufs=8,logbsize=32k,noquota)
/dev/mapper/onn-tmp on /tmp type xfs (rw,relatime,attr2,discard,inode64,logbufs=8,logbsize=64k,sunit=128,swidth=128,noquota)
/dev/mapper/onn-home on /home type xfs (rw,relatime,attr2,discard,inode64,logbufs=8,logbsize=64k,sunit=128,swidth=128,noquota)
/dev/mapper/onn-var on /var type xfs (rw,relatime,attr2,discard,inode64,logbufs=8,logbsize=64k,sunit=128,swidth=128,noquota)
/dev/mapper/onn-var_crash on /var/crash type xfs (rw,relatime,attr2,discard,inode64,logbufs=8,logbsize=64k,sunit=128,swidth=128,noquota)
/dev/mapper/onn-var_log on /var/log type xfs (rw,relatime,attr2,discard,inode64,logbufs=8,logbsize=64k,sunit=128,swidth=128,noquota)
/dev/mapper/onn-var_log_audit on /var/log/audit type xfs (rw,relatime,attr2,discard,inode64,logbufs=8,logbsize=64k,sunit=128,swidth=128,noquota)
sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw,relatime)
tmpfs on /run/user/0 type tmpfs (rw,nosuid,nodev,relatime,size=810168k,mode=700)
/dev/sdb1 on /gluster_bricks type xfs (rw,relatime,attr2,inode64,logbufs=8,logbsize=32k,noquota)
[root@node110 /]# df -h
Filesystem Size Used Avail Use% Mounted on
devtmpfs 3.9G 0 3.9G 0% /dev
tmpfs 3.9G 0 3.9G 0% /dev/shm
tmpfs 3.9G 17M 3.9G 1% /run
tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup
/dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1 22G 3.5G 19G 16% /
/dev/sda1 1014M 350M 665M 35% /boot
/dev/mapper/onn-tmp 1014M 40M 975M 4% /tmp
/dev/mapper/onn-home 1014M 40M 975M 4% /home
/dev/mapper/onn-var 15G 361M 15G 3% /var
/dev/mapper/onn-var_crash 10G 105M 9.9G 2% /var/crash
/dev/mapper/onn-var_log 8.0G 125M 7.9G 2% /var/log
/dev/mapper/onn-var_log_audit 2.0G 51M 2.0G 3% /var/log/audit
tmpfs 792M 0 792M 0% /run/user/0
/dev/sdb1 70G 532M 70G 1% /gluster_bricks
[root@node110 /]# cat /etc/fstab
#
# /etc/fstab
# Created by anaconda on Wed May 18 09:15:37 2022
#
# Accessible filesystems, by reference, are maintained under '/dev/disk/'.
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.
#
# After editing this file, run 'systemctl daemon-reload' to update systemd
# units generated from this file.
#
/dev/onn/ovirt-node-ng-4.4.10.2-0.20220303.0+1 / xfs defaults,discard 0 0
UUID=0c3b78f9-431b-47e8-9b14-9c9b58c97932 /boot xfs defaults 0 0
/dev/mapper/onn-home /home xfs defaults,discard 0 0
/dev/mapper/onn-tmp /tmp xfs defaults,discard 0 0
/dev/mapper/onn-var /var xfs defaults,discard 0 0
/dev/mapper/onn-var_crash /var/crash xfs defaults,discard 0 0
/dev/mapper/onn-var_log /var/log xfs defaults,discard 0 0
/dev/mapper/onn-var_log_audit /var/log/audit xfs defaults,discard 0 0
/dev/mapper/onn-swap none swap defaults 0 0
UUID="ac77a11c-6175-46d8-82ef-5d41b46c0a27" /gluster_bricks xfs defaults 0 0
[root@node110 /]#
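The brick filesystem (/dev/sdb1 on /gluster_bricks) comes back after a reboot only if /etc/fstab has an entry for it. The article confirms this by reading the `mount`, `df -h` and `cat /etc/fstab` output. Below is a minimal bash sketch of the same check. It uses a hypothetical helper, `has_fstab_entry`, which is not part of oVirt or Gluster. The helper succeeds when a non-comment fstab line has the given mount point and filesystem type:

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper, not an oVirt/Gluster tool): check that a
# mount point has a persistent entry of the expected type in an fstab file.
set -euo pipefail

# has_fstab_entry FILE MOUNTPOINT FSTYPE
# Exit status 0 if a non-comment line has field 2 == MOUNTPOINT and
# field 3 == FSTYPE, otherwise 1.
has_fstab_entry() {
  awk -v mp="$2" -v fs="$3" '
    $1 !~ /^#/ && NF >= 3 && $2 == mp && $3 == fs { found = 1 }
    END { exit !found }
  ' "$1"
}
```

On a node, the helper can be called as `has_fstab_entry /etc/fstab /gluster_bricks xfs && findmnt /gluster_bricks`. The `findmnt` command (util-linux) then confirms that the filesystem is also mounted at that moment.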

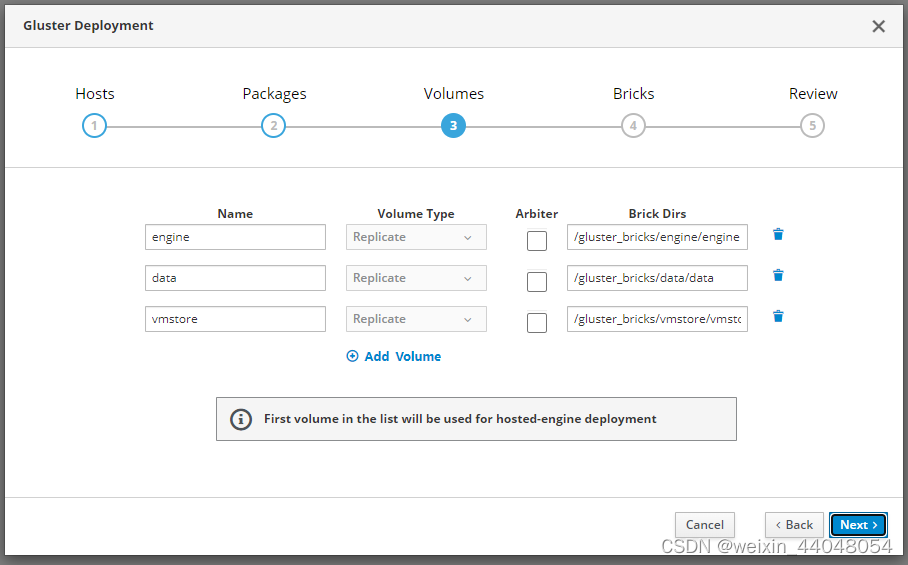

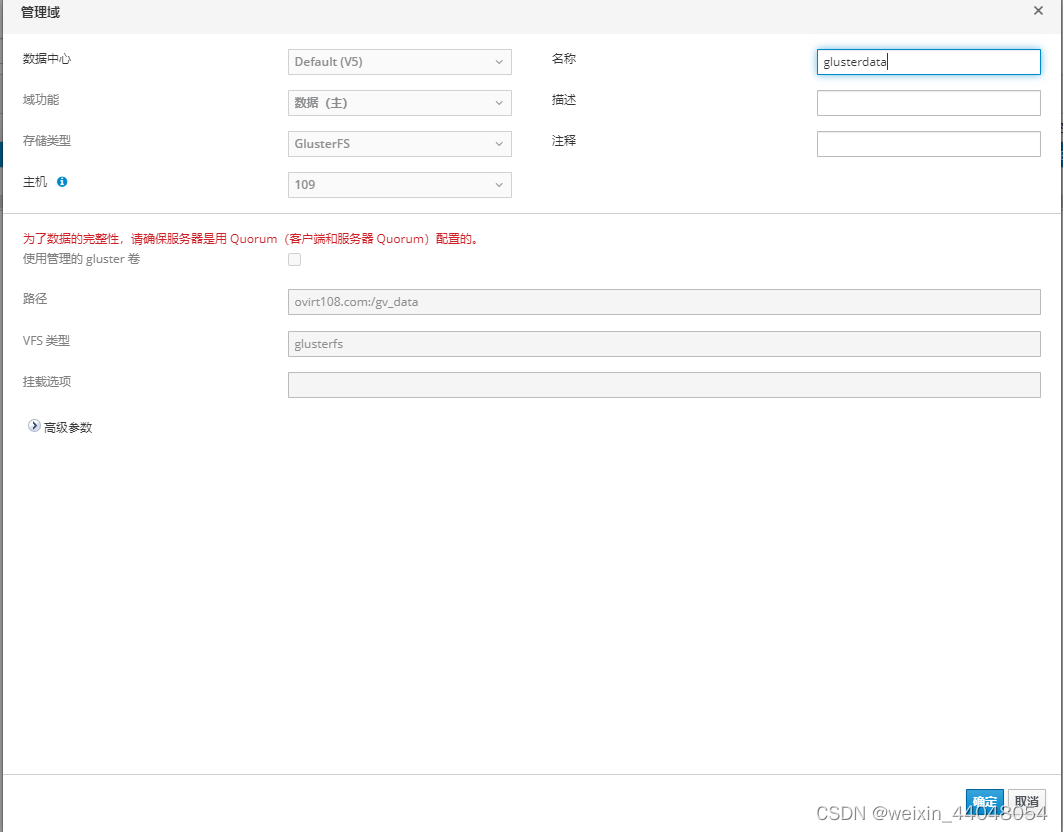

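Configure a 3-replica GlusterFS replicated volume (run on the ovm4 node)

mkdir -p /gluster_bricks/data/ # run this step on all nodes first

gluster volume create gv_data replica 3 transport tcp ovm4stor:/gluster_bricks/data/ ovm5stor:/gluster_bricks/data/ ovm6stor:/gluster_bricks/data/ # the hostnames used here resolve to the IPs of the bonded dual-NIC interfaces

gluster volume start gv_data # start the Gluster volume

gluster volume info # can be run on any node to confirm the state of the Gluster volume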

Volume Name: gv_data
Type: Replicate
Volume ID: a80edc75-e322-488b-a0be-287dcf7008de
Status: Started
Snapshot Count: 0
Number of Bricks: 1 x 3 = 3
Transport-type: tcp
Bricks:
Brick1: ovm4stor:/gluster_bricks/data
Brick2: ovm5stor:/gluster_bricks/data
Brick3: ovm6stor:/gluster_bricks/data
Options Reconfigured:
performance.client-io-threads: off
nfs.disable: on
transport.address-family: inet

[root@ovirt108 /]# gluster volume create gv_data replica 3 transport tcp ovirt108.com:/gluster_bricks/data/ node109.com:/gluster_bricks/data/ node110.com:/gluster_bricks/data/
volume create: gv_data: success: please start the volume to access data
[root@ovirt108 /]#
[root@ovirt108 /]# ^C
[root@ovirt108 /]# gluster volume start gv_data
volume start: gv_data: success
[root@ovirt108 /]# gluster volume info
Volume Name: gv_data
Type: Replicate
Volume ID: 46e72530-615d-46de-a5cd-ddb82a366cdf
Status: Started
Snapshot Count: 0
Number of Bricks: 1 x 3 = 3
Transport-type: tcp
Bricks:
Brick1: ovirt108.com:/gluster_bricks/data
Brick2: node109.com:/gluster_bricks/data
Brick3: node110.com:/gluster_bricks/data
Options Reconfigured:
storage.fips-mode-rchecksum: on
transport.address-family: inet
nfs.disable: on
performance.client-io-threads: off
[root@ovirt108 /]#
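The volume steps can be collected into one small bash script: create the brick directory on every node, then create, start and inspect the volume from a single node. This is only a sketch, and it makes these assumptions:

- passwordless root SSH to all three nodes, as configured earlier;
- glusterd running on every node, with the peers already joined by `gluster peer probe` (not shown in this section);
- the node names from the test run above.

The function names and the `DRY_RUN` switch are hypothetical, and `DRY_RUN=1` only prints the commands. The start step checks the volume status first because `gluster volume start` on a volume that is already running fails with "already started".

```bash
#!/usr/bin/env bash
# Sketch: prepare bricks and create/start a replica-3 volume from one node.
# Assumptions: passwordless root SSH to every node, glusterd running and
# peers already probed; node and volume names come from this test run.
set -euo pipefail

BRICK_DIR=/gluster_bricks/data
VOLUME=gv_data
NODES=(ovirt108.com node109.com node110.com)

# Run a command, or only print it when DRY_RUN=1 (hypothetical switch).
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then echo "+ $*"; else "$@"; fi
}

# Print one "host:BRICK_DIR" per line, as `gluster volume create` expects.
brick_args() {
  local h
  for h in "$@"; do printf '%s:%s\n' "$h" "$BRICK_DIR"; done
}

# Read `gluster volume info` output on stdin; succeed if it reports Started.
volume_is_started() {
  grep -q '^Status: Started'
}

# The brick directory must exist on every node before the volume is created.
prepare_bricks() {
  local h
  for h in "${NODES[@]}"; do
    run ssh "root@${h}" mkdir -p "$BRICK_DIR"
  done
}

create_volume() {
  # Unquoted $(...) on purpose: one word per brick (paths contain no spaces).
  run gluster volume create "$VOLUME" replica 3 transport tcp $(brick_args "${NODES[@]}")
}

start_if_needed() {
  local info
  info="$(run gluster volume info "$VOLUME")"
  if ! volume_is_started <<<"$info"; then
    run gluster volume start "$VOLUME"
  fi
}
```

After sourcing the file, run `prepare_bricks && create_volume && start_if_needed`. Setting `DRY_RUN=1` first previews the exact commands without touching the cluster.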

Change the ownership of the bricks

[root@ovirt108 ~]# cd /gluster_bricks/
[root@ovirt108 gluster_bricks]# ll
total 0
drwxr-xr-x 3 root root 24 May 20 06:43 data
[root@ovirt108 gluster_bricks]# cd ..
[root@ovirt108 /]# ll
total 24
lrwxrwxrwx. 1 root root 7 Jun 22 2021 bin -> usr/bin
dr-xr-xr-x. 7 root root 4096 May 20 10:41 boot
drwxr-xr-x. 3 root root 20 Mar 3 16:14 data
drwxr-xr-x 19 root root 3300 May 20 10:41 dev
drwxr-xr-x. 134 root root 12288 May 20 10:41 etc
drwxr-xr-x 3 root root 18 May 20 06:43 gluster_bricks
drwxr-xr-x. 2 root root 6 Jun 22 2021 home
lrwxrwxrwx. 1 root root 7 Jun 22 2021 lib -> usr/lib
lrwxrwxrwx. 1 root root 9 Jun 22 2021 lib64 -> usr/lib64
drwx------. 2 root root 6 Mar 3 16:03 lost+found
drwxr-xr-x. 2 root root 6 Jun 22 2021 media
drwxr-xr-x. 2 root root 6 Jun 22 2021 mnt
drwxr-xr-x. 2 root root 6 Jun 22 2021 opt
dr-xr-xr-x 278 root root 0 May 20 10:41 proc
drwxr-xr-x. 3 root root 25 Mar 3 16:14 rhev
dr-xr-x---. 5 root root 200 May 19 05:04 root
drwxr-xr-x 43 root root 1340 May 20 10:41 run
lrwxrwxrwx. 1 root root 8 Jun 22 2021 sbin -> usr/sbin
drwxr-xr-x. 2 root root 6 Jun 22 2021 srv
dr-xr-xr-x 13 root root 0 May 20 10:41 sys
drwxrwxrwt. 8 root root 223 May 20 12:02 tmp
drwxr-xr-x. 13 root root 158 Mar 3 16:10 usr
drwxr-xr-x. 21 root root 4096 May 18 05:50 var
[root@ovirt108 /]# chown vdsm:kvm /gluster_bricks/ -R
[root@ovirt108 /]#
[root@ovirt108 /]# gluster volume info
Volume Name: gv_data
Type: Replicate
Volume ID: 46e72530-615d-46de-a5cd-ddb82a366cdf
Status: Started
Snapshot Count: 0
Number of Bricks: 1 x 3 = 3
Transport-type: tcp
Bricks:
Brick1: ovirt108.com:/gluster_bricks/data
Brick2: node109.com:/gluster_bricks/data
Brick3: node110.com:/gluster_bricks/data
Options Reconfigured:
performance.client-io-threads: off
nfs.disable: on
transport.address-family: inet
storage.fips-mode-rchecksum: on
[root@ovirt108 /]# gluster volume start gv_data
volume start: gv_data: failed: Volume gv_data already started
[root@ovirt108 /]#
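The transcript changes the ownership only on ovirt108. oVirt expects its storage to be owned by vdsm:kvm, which is UID/GID 36 on oVirt hosts. The bricks on node109 and node110 therefore presumably need the same change. The bash sketch below applies the change over SSH. It also sets the Gluster volume options `storage.owner-uid` and `storage.owner-gid` to 36, which oVirt's documentation suggests for keeping that ownership on Gluster volumes (check this against your version). The node names and function names are assumptions taken from this test run, and `DRY_RUN=1` only prints the commands.

```bash
#!/usr/bin/env bash
# Sketch: give vdsm:kvm (UID/GID 36) ownership of the bricks on every node
# and ask Gluster to keep that ownership on the volume root.
# Assumptions: passwordless root SSH to each node; names from this test run.
set -euo pipefail

VDSM_UID=36   # vdsm user on oVirt hosts
KVM_GID=36    # kvm group on oVirt hosts
VOLUME=gv_data
BRICK_ROOT=/gluster_bricks
NODES=(ovirt108.com node109.com node110.com)

# Run a command, or only print it when DRY_RUN=1 (hypothetical switch).
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then echo "+ $*"; else "$@"; fi
}

# Succeed if the given path is owned by UID 36 and GID 36 (GNU stat).
owned_by_vdsm() {
  [[ "$(stat -c '%u:%g' "$1")" == "${VDSM_UID}:${KVM_GID}" ]]
}

fix_ownership() {
  local h
  for h in "${NODES[@]}"; do
    # Same effect as the article's `chown vdsm:kvm /gluster_bricks/ -R`.
    run ssh "root@${h}" chown -R "${VDSM_UID}:${KVM_GID}" "$BRICK_ROOT"
  done
  run gluster volume set "$VOLUME" storage.owner-uid "$VDSM_UID"
  run gluster volume set "$VOLUME" storage.owner-gid "$KVM_GID"
}
```

After running `fix_ownership`, `ssh root@node109.com stat -c '%U:%G' /gluster_bricks/data` should report `vdsm:kvm` on each node. On the local node, `owned_by_vdsm /gluster_bricks/data` does the same check.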


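Article link: https://hqyman.cn/post/7054.html. Articles not original to this site may be reposted freely; reposts of original articles must keep this site's address.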
