    # Download the installer
    wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh -O ~/miniconda.sh
    # Install (this step downloads about 800 MB of files)
    bash ~/miniconda.sh
    # Message shown when installation finishes:
    # ==> For changes to take effect, close and re-open your current shell. <==
    # Thank you for installing Miniconda3!

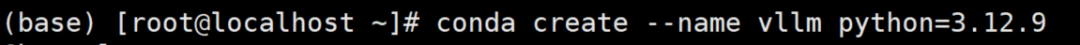

Create a conda environment named `vllm` with Python 3.12.9, then activate it:

    conda create --name vllm python=3.12.9

    (base) [root@MiWiFi-RD03-srv ~]# conda activate vllm
    (vllm) [root@MiWiFi-RD03-srv ~]#
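Before installing anything large, it can help to confirm that the shell is really using the new environment. The sketch below assumes conda exports `CONDA_DEFAULT_ENV` on activation (current releases do) and compares the interpreter against the 3.12 requested above; `check_env` is a hypothetical helper, not part of conda.

```python
import os
import sys


def check_env(expected_env="vllm", expected_version=(3, 12), environ=None, version_info=None):
    """Return a list of problems with the active conda env (empty list if it looks right)."""
    environ = os.environ if environ is None else environ
    version_info = sys.version_info if version_info is None else version_info
    problems = []
    # conda sets CONDA_DEFAULT_ENV to the name of the activated environment
    active = environ.get("CONDA_DEFAULT_ENV")
    if active != expected_env:
        problems.append(f"active conda env is {active!r}, expected {expected_env!r}")
    # Compare only major.minor; the patch level (e.g. 3.12.9) may legitimately differ
    if tuple(version_info[:2]) != expected_version:
        found = ".".join(str(p) for p in version_info[:3])
        problems.append(f"Python {found} found, expected {expected_version[0]}.{expected_version[1]}")
    return problems


if __name__ == "__main__":
    issues = check_env()
    print("environment OK" if not issues else "\n".join(issues))
```

Run it with `python` inside the activated environment; an empty problem list means the later `pip install` commands will land in `vllm`.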

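    # Install vLLM; this pulls in roughly 8 GB of packages.
    # With the Huawei Cloud mirror I got errors; the Aliyun mirror worked without problems,
    # e.g. pip install vllm -i https://mirrors.aliyun.com/pypi/simple/
    (vllm) [root@MiWiFi-RD03-srv ~]# pip install vllm -i https://mirrors.huaweicloud.com/repository/pypi/simple
    Looking in indexes: https://mirrors.huaweicloud.com/repository/pypi/simple
    Collecting vllm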
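Because the vLLM install is large and mirror-dependent, it is worth confirming afterwards that the packages actually landed in the `vllm` environment. A minimal sketch using only the standard library's `importlib.metadata`; `installed_version` is a hypothetical helper name.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of a package, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # torch comes in as a vLLM dependency; modelscope is installed in a later step
    for pkg in ("vllm", "torch", "modelscope"):
        print(f"{pkg}: {installed_version(pkg) or 'not installed'}")
```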

Hugging Face mirror reachable from mainland China: https://hf-mirror.com/deepseek-ai/DeepSeek-R1
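hf-mirror.com follows the same URL layout as huggingface.co, so a single file can be fetched by swapping the host, and tools built on `huggingface_hub` honor the `HF_ENDPOINT` environment variable. The sketch below only builds a URL and an environment mapping; `mirror_file_url` and `mirror_env` are hypothetical helpers, and the `resolve/main` path assumes the repository's default `main` branch.

```python
import os

HF_MIRROR = "https://hf-mirror.com"


def mirror_file_url(repo_id, filename, revision="main", endpoint=HF_MIRROR):
    """Build the direct-download URL for one file of a model repo on the mirror."""
    return f"{endpoint.rstrip('/')}/{repo_id}/resolve/{revision}/{filename}"


def mirror_env(base=None, endpoint=HF_MIRROR):
    """Return a copy of the environment with HF_ENDPOINT pointing at the mirror."""
    env = dict(os.environ if base is None else base)
    env["HF_ENDPOINT"] = endpoint
    return env


if __name__ == "__main__":
    print(mirror_file_url("deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B", "config.json"))
```

`mirror_env()` could, for example, be passed as `env=` to `subprocess.run` when launching a Hugging Face download tool, so only that child process uses the mirror.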

    # Install a Python package used to download the model
    (vllm) [root@MiWiFi-RD03-srv ~]# pip install modelscope -i https://mirrors.huaweicloud.com/repository/pypi/simple
    Looking in indexes: https://mirrors.huaweicloud.com/repository/pypi/simple
    Collecting modelscope

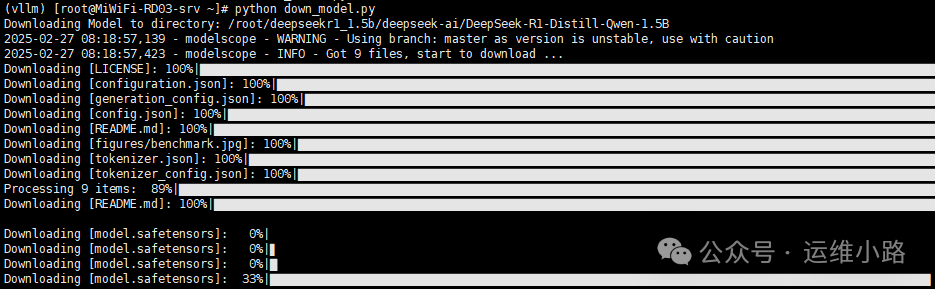

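Create the download script:

    vi down_model.py

Contents of `down_model.py`:

    from modelscope import snapshot_download

    # Download the model into cache_dir; the return value is the local model directory
    model_dir = snapshot_download('deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B',
                                  cache_dir='/root/deepseekr1_1.5b',
                                  revision='master')
    print(model_dir)

Run the download:

    python down_model.py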
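The `vllm serve` command later points at `cache_dir` joined with the model ID, which is where `snapshot_download` put the files in this run. Some ModelScope versions rewrite `.` in the directory name (giving `1___5B` instead of `1.5B`), so it is safer to check the directory before starting the server. A sketch of such a check; `find_model_dir` and the `REQUIRED_FILES` list are assumptions based on a typical Hugging Face-format model folder.

```python
from pathlib import Path

# Files a Hugging Face-format model directory normally contains (assumption).
REQUIRED_FILES = ("config.json", "tokenizer_config.json")


def find_model_dir(cache_dir, model_id):
    """Return the local directory holding model_id under cache_dir, or None if not found."""
    owner, name = model_id.split("/", 1)
    # Try the plain name first, then the variant with '.' rewritten as '___'
    for candidate_name in (name, name.replace(".", "___")):
        candidate = Path(cache_dir) / owner / candidate_name
        if all((candidate / f).is_file() for f in REQUIRED_FILES):
            return candidate
    return None


if __name__ == "__main__":
    path = find_model_dir("/root/deepseekr1_1.5b", "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B")
    print(path if path else "model files not found")
```

Whatever path this prints is the one to hand to `vllm serve` and to use as the `model` field in client requests.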

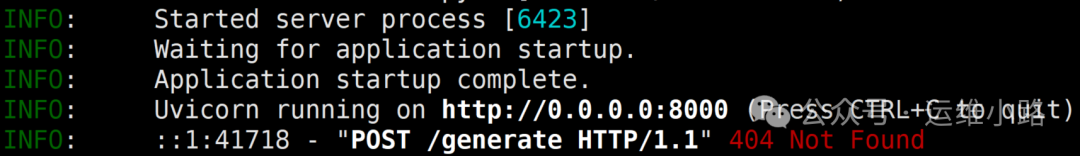

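The trailing `--dtype=half` flag is only needed because my GPU is not capable enough to run the model at its default precision; normally the local model path alone is enough.

    vllm serve \
        /root/deepseekr1_1.5b/deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B \
        --dtype=half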
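A common reason an older card needs `--dtype=half` is that the model's config requests bfloat16, which NVIDIA GPUs only support from compute capability 8.0 (Ampere) onward, while float16 (`half`) also runs on older cards. The sketch below encodes that rule; `pick_dtype` is a hypothetical helper, and reading the capability through `torch.cuda.get_device_capability` is just one way to obtain it.

```python
def pick_dtype(major, minor):
    """Suggest a value for `vllm serve --dtype` from the GPU's CUDA compute capability.

    bfloat16 needs compute capability >= 8.0; older GPUs fall back to float16 ("half").
    """
    return "auto" if (major, minor) >= (8, 0) else "half"


if __name__ == "__main__":
    try:
        import torch  # installed as a vLLM dependency

        if torch.cuda.is_available():
            cap = torch.cuda.get_device_capability(0)  # (major, minor) of GPU 0
            print(f"compute capability {cap[0]}.{cap[1]} -> --dtype={pick_dtype(*cap)}")
        else:
            print("no CUDA GPU visible")
    except ImportError:
        print("torch is not installed in this environment")
```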

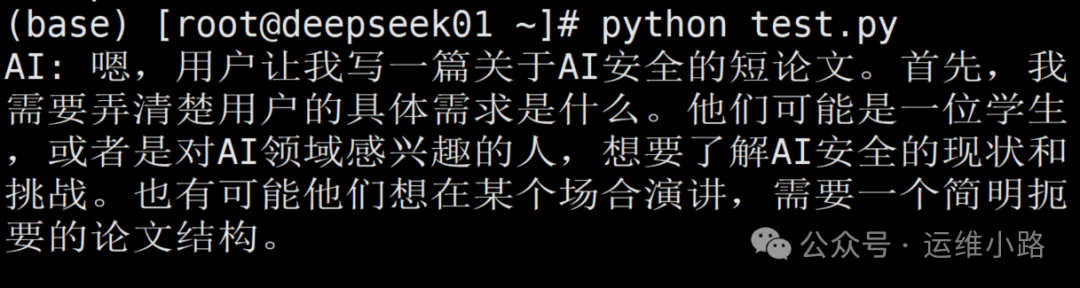

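A Python client that calls the OpenAI-compatible endpoint and prints the reply as it streams in:

    import json

    import requests


    def stream_chat_response():
        response = requests.post(
            "http://localhost:8000/v1/chat/completions",
            json={
                "model": "/root/deepseekr1_1.5b/deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B",
                "messages": [{"role": "user", "content": "Write a short essay on AI safety"}],
                "stream": True,
                "temperature": 0.7,
            },
            stream=True,
        )
        response.raise_for_status()  # fail early on HTTP errors (e.g. a wrong model name)

        print("AI: ", end="", flush=True)  # print the output prefix
        full_response = []
        try:
            for chunk in response.iter_lines():
                if not chunk:
                    continue  # skip the blank lines that separate events
                # Each event line looks like "data: {...}"
                decoded_chunk = chunk.decode("utf-8").strip()
                if not decoded_chunk.startswith("data: "):
                    continue
                json_data = decoded_chunk[6:]  # strip the "data: " prefix
                if json_data == "[DONE]":
                    break  # end-of-stream sentinel
                try:
                    data = json.loads(json_data)
                except json.JSONDecodeError:
                    continue  # ignore incomplete JSON
                if data.get("choices"):
                    choice = data["choices"][0]
                    # Extract the text fragment; content may be missing or null
                    content = choice.get("delta", {}).get("content") or ""
                    if content:
                        print(content, end="", flush=True)  # stream output in real time
                        full_response.append(content)
                    # A non-null finish_reason marks the end of generation
                    if choice.get("finish_reason"):
                        print("\n")
        except KeyboardInterrupt:
            print("\n\n[Generation interrupted by user]")
        return "".join(full_response)


    # Run the conversation
    if __name__ == "__main__":
        result = stream_chat_response()
        print("\n--- Full response ---")
        print(result)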
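The streaming loop above boils down to parsing server-sent-event lines of the form `data: {json}`, ending with `data: [DONE]`. Pulling that into a pure function makes it testable without a running server. `extract_delta_text` is a hypothetical helper, and the sample lines are hand-written in the OpenAI chat-completions chunk format, not captured from vLLM.

```python
import json


def extract_delta_text(lines):
    """Concatenate the first choice's delta content from OpenAI-style streaming lines.

    Accepts bytes or str lines, stops at "data: [DONE]", skips blank or malformed lines.
    """
    parts = []
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line.startswith("data: "):
            continue
        payload = line[len("data: "):]
        if payload == "[DONE]":
            break
        try:
            event = json.loads(payload)
        except json.JSONDecodeError:
            continue
        choices = event.get("choices") or []
        if choices:
            text = choices[0].get("delta", {}).get("content")
            if text:
                parts.append(text)
    return "".join(parts)


if __name__ == "__main__":
    sample = [
        b'data: {"choices": [{"delta": {"role": "assistant", "content": ""}}]}',
        b"",
        b'data: {"choices": [{"delta": {"content": "Hello"}}]}',
        b'data: {"choices": [{"delta": {"content": ", world"}, "finish_reason": "stop"}]}',
        b"data: [DONE]",
    ]
    print(extract_delta_text(sample))  # prints "Hello, world"
```

In the client, `response.iter_lines()` could be passed straight to this function when only the final text is needed.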

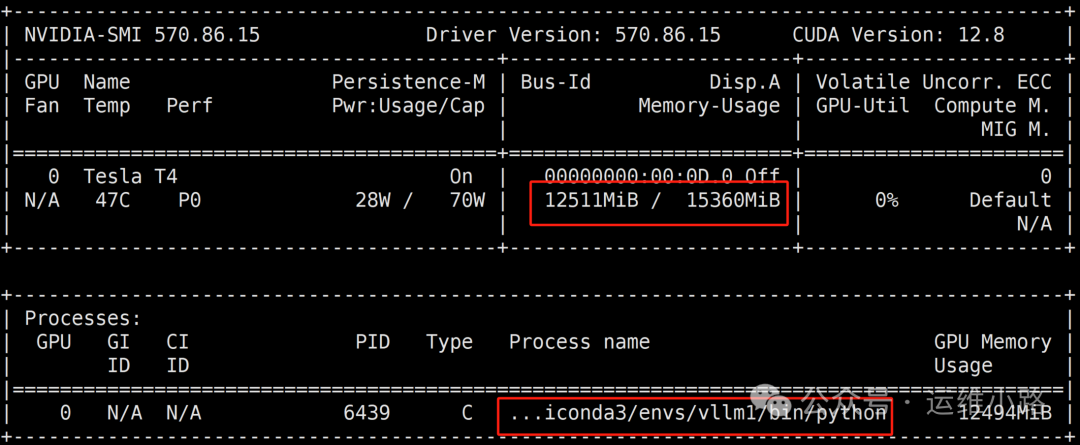

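    # Similar to adding a repository source; see the previous subsection for where and how to download the package
    dpkg -i nvidia-driver-local-repo-ubuntu2004-570.86.15_1.0-1_amd64.deb
    apt-get update
    # Install the driver. CUDA does not even need to be installed separately. Reboot the system when installation finishes.
    apt-get install nvidia-driver-570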
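After the reboot, `nvidia-smi` should list the GPU and a 570-series driver. The sketch below runs it with the query flags `--query-gpu=name,driver_version,memory.total --format=csv,noheader` and parses the CSV rows; `parse_gpu_csv` and `driver_major` are hypothetical helpers, and the sample row in the test is illustrative rather than output from the author's machine.

```python
import shutil
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=name,driver_version,memory.total", "--format=csv,noheader"]


def parse_gpu_csv(text):
    """Parse nvidia-smi CSV rows into dicts with name, driver and memory fields."""
    gpus = []
    for row in text.strip().splitlines():
        fields = [f.strip() for f in row.split(",")]
        if len(fields) == 3:
            gpus.append({"name": fields[0], "driver": fields[1], "memory": fields[2]})
    return gpus


def driver_major(gpu):
    """Return the major driver version, e.g. 570 for '570.86.15'."""
    return int(gpu["driver"].split(".")[0])


if __name__ == "__main__":
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found: the driver is probably not installed")
    else:
        try:
            out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
        except subprocess.CalledProcessError as exc:
            print("nvidia-smi failed (driver not loaded? reboot pending?):", exc.stderr)
        else:
            for gpu in parse_gpu_csv(out):
                print(gpu["name"], gpu["driver"], gpu["memory"], "major:", driver_major(gpu))
```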

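Article link: https://hqyman.cn/post/9747.html. Articles not originally written for this site may be freely reposted; reposts of original articles must keep this site's address.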
